NVMe "Disk" Bandwidth and Latency for Batched Block Requests

Posted on 2019-03-22 16:00 by Timo Bingmann. Tags: #c++ #stxxl #thrill

Last week I had the pleasure of being invited to the Dagstuhl seminar 19111 on Theoretical Models of Storage Systems. I gave a talk on the history of STXXL and Thrill, but also wanted to include some current developments. What I found most interesting is how the gap between RAM and disk bandwidth is closing due to the (relatively) new Non-Volatile Memory Express (NVMe) storage devices.

Since I am involved in many projects using external memory, I decided to perform a simple set of fundamental experiments to compare rotational disks and newer solid-state devices (SSDs). The results were interesting enough to write this blog article about.

Among the tools of STXXL/FOXXLL there are two benchmarks which perform two distinct access patterns: Scan (benchmark_disks) and Random (benchmark_disks_random).

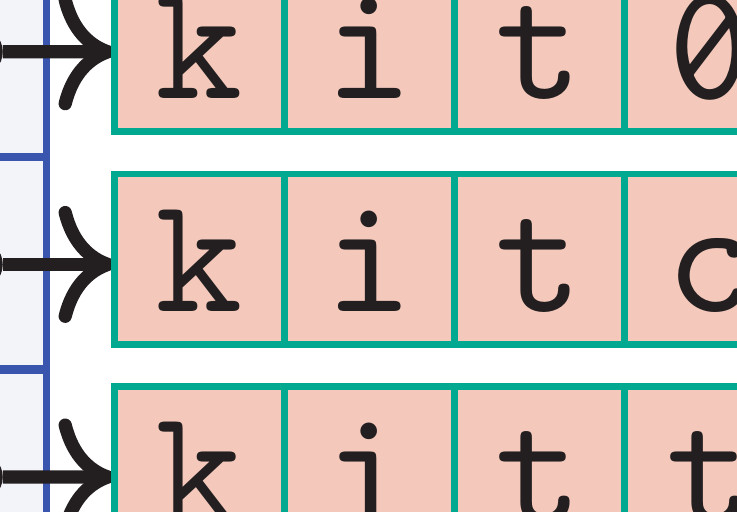

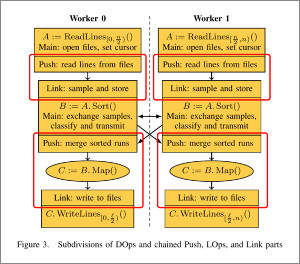

- In Scan, a batch of k sequential blocks of size B is read or written in order.

- In Random, a batch of k randomly selected blocks of size B from a span of size N is read or written.

The Scan experiment is probably the fastest access method as it reads or writes the disk (actually: storage device) sequentially. The Random experiment is well suited to determining the access latency of the disk, as the device first has to seek to each block and then transfer the data. Notice that the Random experiment performs batched block accesses, as one would in a query-answering system where the next set of random blocks depends on calculations performed with the preceding blocks (like in a B-Tree). This differs from the experiment done by most "throughput" measurement tools, which issue a continuous stream of random block accesses.
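To make the two access patterns concrete, here is a minimal sketch of how the block offsets of one batch could be generated. It is not the code of benchmark_disks or benchmark_disks_random: the function names `scan_batch` and `random_batch` are hypothetical, and the sketch assumes that random blocks are aligned to multiples of B and drawn uniformly with replacement, which the actual tools may handle differently.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical helper: byte offsets of one Scan batch, i.e. k consecutive
// blocks of size B starting at byte offset `start`.
std::vector<std::uint64_t> scan_batch(std::uint64_t start, std::size_t k,
                                      std::uint64_t B) {
    std::vector<std::uint64_t> offsets;
    offsets.reserve(k);
    for (std::size_t i = 0; i < k; ++i)
        offsets.push_back(start + i * B);
    return offsets;
}

// Hypothetical helper: byte offsets of one Random batch, i.e. k blocks of
// size B chosen from a span of N bytes. Assumption: blocks are B-aligned
// and drawn uniformly with replacement.
template <typename Rng>
std::vector<std::uint64_t> random_batch(std::uint64_t N, std::size_t k,
                                        std::uint64_t B, Rng& rng) {
    assert(B > 0 && N >= B);  // the span must hold at least one block
    std::uniform_int_distribution<std::uint64_t> pick(0, N / B - 1);
    std::vector<std::uint64_t> offsets;
    offsets.reserve(k);
    for (std::size_t i = 0; i < k; ++i)
        offsets.push_back(pick(rng) * B);
    return offsets;
}
```

In a batched experiment, all k requests of one batch would be issued together, and the next batch would be generated only after every request of the current batch has completed. For example, `scan_batch(0, 4, 1024)` yields the offsets 0, 1024, 2048 and 3072.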