Thrill YouTube Tutorial: High-Performance Algorithmic Distributed Computing with C++

Posted on 2020-06-01 11:13 by Timo Bingmann. Tags: #talk #university #thrill

This post announces the completion of my new tutorial presentation and YouTube video: "Thrill Tutorial: High-Performance Algorithmic Distributed Computing with C++".

YouTube video link: https://youtu.be/UxW5YyETLXo (2h 41min)

Slide Deck: slides-20200601-thrill-tutorial.pdf (21.3 MiB) (114 slides)

In this tutorial we present our new distributed Big Data processing framework called Thrill (https://project-thrill.org). It is a C++ framework consisting of a set of basic scalable algorithmic primitives such as mapping, reducing, sorting, merging, and joining, plus additional MPI-like collectives. These primitives can be combined into larger, more complex algorithms, such as WordCount, PageRank, and suffix sorting. Such composite algorithms can then be run on very large inputs using a distributed computing cluster with external memory.
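To make the idea of composing primitives concrete, here is a minimal sequential sketch of WordCount as a flat-map step followed by a reduce-by-key step. It uses only the C++ standard library and deliberately does not use Thrill's API; the names `WordCountPair`, `SplitIntoPairs`, and `ReduceByWord` are hypothetical and chosen for illustration only. A distributed framework would run both steps in parallel across workers, which this sketch does not attempt.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical item type for this sketch: a word and its (partial) count.
using WordCountPair = std::pair<std::string, std::size_t>;

// Flat-map step: each input line emits zero or more (word, 1) pairs.
std::vector<WordCountPair> SplitIntoPairs(const std::vector<std::string>& lines) {
    std::vector<WordCountPair> pairs;
    for (const std::string& line : lines) {
        std::istringstream stream(line);
        std::string word;
        while (stream >> word) {
            // operator>> skips whitespace, so extracted words are non-empty.
            assert(!word.empty());
            pairs.emplace_back(word, 1);
        }
    }
    return pairs;
}

// Reduce-by-key step: pairs with the same word are combined by adding counts.
std::map<std::string, std::size_t> ReduceByWord(
    const std::vector<WordCountPair>& pairs) {
    std::map<std::string, std::size_t> counts;
    for (const WordCountPair& pair : pairs) {
        counts[pair.first] += pair.second;
    }
    return counts;
}
```

Calling `ReduceByWord(SplitIntoPairs(lines))` on a vector of text lines yields one count per distinct whitespace-separated word.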

After introducing the audience to Thrill, we guide participants through the initial steps of downloading and compiling the software package. The tutorial then gives an overview of the challenges of programming real distributed machines, and of the models and frameworks that address them. With these foundations, Thrill's DIA (Distributed Immutable Array) programming model is introduced with an extensive listing of DIA operations and how to actually use them. The participants are then given a set of small example tasks to gain hands-on experience with DIAs.

After the hands-on session, the tutorial continues with more details on how to run Thrill programs on clusters and how to generate execution profiles. Then, deeper details of Thrill's internal software layers are discussed to advance the participants' mental model of how Thrill executes DIA operations. The final hands-on tutorial is designed as a concerted group effort to implement K-means clustering for 2D points.
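For readers unfamiliar with the algorithm targeted in the final session, the following is a minimal sequential sketch of one k-means (Lloyd) iteration for 2D points in plain standard C++. It is not the code developed in the video and does not use Thrill; `Point`, `SquaredDistance`, `ClosestCenter`, and `LloydStep` are hypothetical names introduced here. Conceptually, the assignment step resembles a mapping operation and the per-center summation resembles a reduction by key, which is one plausible way to think about a distributed version.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical 2D point type for this sketch.
struct Point {
    double x, y;
};

// Squared Euclidean distance; the square root is not needed for comparisons.
double SquaredDistance(const Point& a, const Point& b) {
    const double dx = a.x - b.x;
    const double dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// Assignment step: index of the center closest to point p.
std::size_t ClosestCenter(const Point& p, const std::vector<Point>& centers) {
    assert(!centers.empty());
    std::size_t best = 0;
    double best_dist = std::numeric_limits<double>::max();
    for (std::size_t i = 0; i < centers.size(); ++i) {
        const double dist = SquaredDistance(p, centers[i]);
        if (dist < best_dist) {
            best_dist = dist;
            best = i;
        }
    }
    return best;
}

// One Lloyd iteration: assign every point to its closest center, then
// move each center to the mean of the points assigned to it.
std::vector<Point> LloydStep(const std::vector<Point>& points,
                             const std::vector<Point>& centers) {
    struct Sum {
        double x = 0.0, y = 0.0;
        std::size_t count = 0;
    };
    std::vector<Sum> sums(centers.size());
    for (const Point& p : points) {
        Sum& sum = sums[ClosestCenter(p, centers)];
        sum.x += p.x;
        sum.y += p.y;
        ++sum.count;
    }
    // Centers without any assigned point keep their previous position.
    std::vector<Point> next = centers;
    for (std::size_t i = 0; i < centers.size(); ++i) {
        if (sums[i].count > 0) {
            next[i] = Point{sums[i].x / sums[i].count, sums[i].y / sums[i].count};
        }
    }
    return next;
}
```

Repeating `LloydStep` until the centers stop moving (or for a fixed number of rounds) gives the usual k-means loop; how to choose initial centers is left open in this sketch.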

The video on YouTube (https://youtu.be/UxW5YyETLXo) contains both presentation and live-coding sessions. It has a high information density and covers many topics.

Table of Contents

- Thrill Motivation Pitch

- Introduction to Parallel Machines

- 0:20:20 The Real Deal: Examples of Machines

- 0:24:50 Networks: Types and Measurements

- 0:31:47 Models

- 0:35:58 Implementations and Frameworks

- The Thrill Framework

- 0:39:28 Thrill's DIA Abstraction and List of Operations

- 0:44:00 Illustrations of DIA Operations

- 0:55:32 Tutorial: Playing with DIA Operations

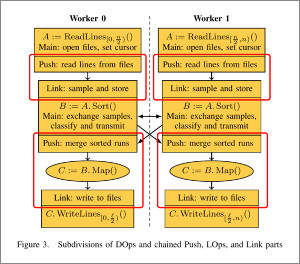

- 1:08:21 Execution of Collective Operations in Thrill

- 1:21:10 Tutorial: Running Thrill on a Cluster

- 1:27:02 Tutorial: Logging and Profiling

- 1:32:14 Going Deeper into Thrill

- 1:32:49 Layers of Thrill

- 1:37:59 File - Variable-Length C++ Item Store

- 1:43:54 Readers and Writers

- 1:46:43 Thrill's Communication Abstraction

- 1:48:07 Stream - Async Big Data All-to-All

- 1:49:46 Thrill's Data Processing Pipelines

- 1:51:29 Thrill's Current Sample Sort

- 1:54:14 Optimization: Consume and Keep

- 1:59:34 Memory Allocation Areas in Thrill

- 2:01:17 Memory Distribution in Stages

- 2:02:22 Pipelined Data Flow Processing

- 2:08:48 ReduceByKey Implementation

- 2:12:06 Tutorial: First Steps towards k-Means

- 2:35:56 Conclusion
