Speedtest and Comparison of Open-Source Cryptography Libraries and Compiler Flags

Posted on 2008-07-14 14:53 by Timo Bingmann at Permlink with 12 Comments. Tags: #cryptography #crypto-speedtest

Abstract

There are many well-known open-source cryptography libraries available, which implement many different ciphers. So which library and which cipher(s) should one use for a new program? This comparison presents a wealth of experimentally determined speed test results to allow an educated answer to this question.

The speed tests encompass eight open-source cryptography libraries, from which 15 different ciphers are examined. The performance experiments were run on five different computers which had up to six different Linux distributions installed, leading to ten tested CPU / distribution combinations. Finally, the cipher code was also compiled using four different C++ compilers with 35 different optimization flag combinations.

Two different test programs were written: the first to verify cipher implementations against each other, the second to perform timed speed tests on the ciphers exported by the different libraries. A cipher speed test run is composed of both encryption and decryption of a buffer. The buffer length is varied from 16 bytes to 1 MB in size.

Many of the observed results are unexpected. Blowfish turned out to be the fastest cipher. But cipher selection cannot be based solely on speed; other parameters like (perceived) strength and age are more important. However, raw speed data is important for further discussion.

When regarding the eight selected cryptography libraries, one would expect all libraries to contain approximately the same core cipher implementation, as all calculation results have to be equal. However, the libraries' performance varies greatly. OpenSSL and Beecrypt contain implementations with the highest optimization levels, but these libraries implement only a few ciphers. Tomcrypt, Botan and Crypto++ implement many different ciphers with consistently good performance on all of them. The smaller Nettle library trails somewhat behind, probably due to its age.

The first real surprise of the speed comparison is the extremely slow test results measured on all ciphers implemented in libmcrypt and libgcrypt. libmcrypt's ciphers show an extremely long start-up overhead, but once it is amortized the cipher's throughput is equal to faster libraries. libgcrypt's results on the other hand are really abysmal and trail far behind all the other libraries. This does not bode well for GnuTLS's SSL performance. And libmcrypt's slow start promises bad performance for thousands of PHP applications encrypting small chunks of user data.

Most of the speed test experiments were run on Gentoo Linux, which compiles all programs from source with user-defined compiler flags. This contrasts with most other Linux distributions, which ship pre-compiled binary packages. To verify that the previous results stay valid on other distributions, the experiments were rerun in chroot-jailed installations. As expected, Gentoo Linux showed the highest performance, closely followed by the newer versions of Ubuntu (hardy) and Debian (lenny). The oldest distribution in the test, Debian etch, showed nearly 15% slower speed results than Gentoo.

To make the results transferable to other computers and CPUs, the speed test experiments were run on five different computers, which all had Debian etch installed. No unexpected results were observable: all results show the expected scaling with CPU speed. Most importantly, no cache effects or special speed-ups were detectable. The most robust cipher was CAST5, and the one most sensitive to CPU architecture was Serpent.

Most interesting for applications outside the scope of cipher algorithms was the comparison of compilers and optimization flags. The speed test code and the cipher library Crypto++ were compiled with many different compiler / flag combinations. They were even compiled and measured on Windows to compare Microsoft's compiler with those available on Linux.

The experimental results showed that Intel's C++ compiler produces by far the most optimized code for all ciphers tested. Second and third place go to Microsoft Visual C++ 8.0 and gcc 4.1.2, which generate code that is roughly 16.5% and 17.5% slower, respectively, than that generated by Intel's compiler. gcc's performance is highly dependent on the number of optimization flags enabled: a simple -O3 is not sufficient to produce well-optimized binary code. Relative to gcc 4.1.2, the older compiler version 3.4.6 is about 10% slower on most tests.

All in all the experimental results provide some hard numbers on which to base further discussion. Hopefully some of the libraries' spotlighted deficits can be corrected or at least explained. Lastly the most concrete result: the cipher and library I will use for my planned application is Serpent from the Botan library.

Download Source

| Cryptography Library Speedtest Version 0.1 (current) released 2008-07-14 | ||

| Source code archive: | Download crypto-speedtest-0.1.tar.bz2 (4680kb) MD5: 37fea6c2623da97f09e85401c29a9768 | Browse online |

Table of Contents

- Motivation

- Description of Libraries, Ciphers and Compilers

- Test Method

- Test Environment

- Observation and Discussion

- Conclusion

- Appendix

1 Motivation

Currently I am working on a program dubbed CryptoTE. It is a text editor which automatically saves documents and attachments in an encrypted container file. The idea is to transparently encrypt sensitive passwords and other data so other, possibly malicious programs (and users) cannot read the text. Yes, I know there are many "Password Keeper" programs available on the Internet. However CryptoTE, being a text editor, will be much simpler: it will not force you to structure your password data, no tables, attributes, etc. Last reason: I need it myself. CryptoTE will be available on idlebox.net when finished.

During current development of CryptoTE I have to decide which cryptography library and which cipher(s) to choose for encrypting data. Currently I don't plan on having the user select one of 100 different ciphers, and thus leave cipher selection to some arbitrary choice of the user. ("Blowfish looks pretty, reminds me of my last diving trip, I'll take that one.") So the list of available ciphers will be very short. I also don't care for the following misleading entry on the features list: "This super program has 1000 different ciphers" (which are actually just implemented by the library it uses).

The basic idea before starting this extensive comparison was to use one of the currently strongest (public) ciphers: Rijndael (AES), Serpent or Twofish. Easy so far, but which library to use? Probably libgcrypt or libmcrypt, because the first is used by GnuTLS and the second is a long-standing PHP extension used by many, many web applications.

However, the results of this speed comparison test show that this choice would not have been optimal. It turned out that there are substantial differences in the different libraries' encryption speeds.

Once the speed test was written, the initial results showed such surprising differences, that I extended the test. I ran the library speed test on different Linux distributions and different CPUs / computers. This should determine if the differences were specific to my favorite distribution (Gentoo) or to my desktop computer's CPU architecture.

Testing different distributions, however, is not really fair. The most important criterion for cipher speed is the set of compiler flags used to compile the library sources during packaging. So I expected a distribution using the -O2 flag to show lower speeds than a distribution compiled with -O3 (like my Gentoo is).

Thus I further extended the speed test to compare three different custom cipher implementations across different compilers and compiler flags, in the end even running the speed test on Windows (to satisfy the curiosity of a friend of mine). Here too the speed test results are unexpected.

2 Description of Libraries, Ciphers and Compilers

The speed comparison test was performed using many different ciphers found in well-known open source cryptography libraries. It was run on five different CPUs and six different Linux distributions to reveal details about distribution packaging, compiler flags and CPU attributes.

This section briefly describes which libraries, ciphers and compilers were compared.

2.1 Libraries Tested

| Library | Versions | Language | License | Reason |

|---|---|---|---|---|

| libgcrypt | 1.2.3 / 1.2.4 / 1.4.0 | C | LGPL | Used by GnuTLS, which I prefer over OpenSSL because it throws no valgrind memory errors. |

| libmcrypt | 2.5.7 / 2.5.8 | C | LGPL | Long existing PHP extension. Used by lots and lots of web sites |

| Botan | 1.6.1 / 1.6.2 / 1.6.3 | C++ | BSD | Newer library. More liberal license. Good C++ interface instead of old-fashioned C. |

| Crypto++ | 5.2.1c2a / 5.5 / 5.5.1 / 5.5.2 | C++ | Special | Another C++ library which seems to have a more Win32-ish background. |

| OpenSSL | 0.9.8b / 0.9.8c / 0.9.8e / 0.9.8g | C | Special | Well, it's OpenSSL. Just the low-level cipher interface is tested. |

| Nettle | 1.14.1 / 1.15 | C | LGPL | Very small(!) low-level library. |

| Beecrypt | 4.1.2 | C | LGPL | Another small and possibly fast library. |

| Tomcrypt | 1.06 / 1.17 | C | Public Domain | Least entangled library of cipher implementations. |

The licenses of all these libraries are problematic, because the actual encryption cipher source code is often in the public domain. However, that is some lawyer's job to figure out. For a detailed listing of each library's versions see the extra page: Distribution Package Versions.

Furthermore three custom cipher implementations were included in the speed test. These custom implementations are basically the publicly available original cipher source code modified and extended by myself for direct inclusion in my C++ programs. Included are:

- Optimized Rijndael (AES) by Vincent Rijmen, Antoon Bosselaers and Paulo Barreto.

- Serpent cipher optimized by Dr. Brian Gladman.

- Another implementation of the Serpent cipher extracted from Botan. This is included to compare compiler settings and also because this implementation will be used in CryptoTE.

2.2 Ciphers Tested

The ciphers available in the different libraries vary greatly. Mostly I chose to run a speed test on the strongest ciphers included in the library. All ciphers are tested in ECB (Electronic Codebook) mode, because it is available everywhere and best tests the cipher implementation itself.

| Cipher | Blocksize (bits) | Keysize (bits) | Libgcrypt | Libmcrypt | Botan | Crypto++ | OpenSSL | Nettle | Beecrypt | Tomcrypt |

|---|---|---|---|---|---|---|---|---|---|---|

| Rijndael AES | 128 | 256 | • | • | • | • | • | • | • | • |

| Serpent | 128 | 256 | • | • | • | • | | • | | |

| Twofish | 128 | 256 | • | • | • | • | • | • | ||

| CAST6 (256) | 128 | 256 | • | • | • | |||||

| GOST | 64 | 256 | • | • | • | |||||

| Safer+ | 128 | 256 | • | • | ||||||

| Loki97 | 128 | 256 | • | |||||||

| Anubis | 128 | 256 | | | | | | | | • |

| Blowfish | 64 | 128 | • | • | • | • | • | • | • | • |

| CAST5 (128) | 64 | 128 | • | • | • | • | • | • | | • |

| 3DES | 64 | 168 | • | • | • | • | • | • | | • |

| XTEA | 64 | 128 | • | • | • | • | ||||

| Noekeon | 128 | 128 | | | | | | | | • |

| Khazad | 64 | 128 | • | |||||||

| Skipjack | 64 | 80 | | | | | | | | • |

2.3 Compiler and Flags Tested

Quite late during this speed test process, I decided to also test different compilers and compiler flag combinations. gcc was available in two different versions on my Gentoo system. Further I installed the Intel C/C++ Compiler using their "Non-Commercial Software Development" license. Lastly a friend wanted me to compare it with Visual C++, of which I have an academic edition.

| Name | Version | Platform | Flags Tested |

|---|---|---|---|

| GNU Compiler Collection | 4.1.2 | Gentoo Linux | -O0, -O1, -O2, -O3, -Os, -O2 -march=pentium4, -O3 -march=pentium4, -O2 -march=pentium4 -fomit-frame-pointer, -O3 -march=pentium4 -fomit-frame-pointer, -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer, -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer, -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops, -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops |

| GNU Compiler Collection | 3.4.6 | Gentoo Linux | -O0, -O1, -O2, -O3, -Os, -O2 -march=pentium4, -O3 -march=pentium4, -O2 -march=pentium4 -fomit-frame-pointer, -O3 -march=pentium4 -fomit-frame-pointer, -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer, -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer, -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops, -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops |

| Intel C/C++ Compiler | 10.0 | Gentoo Linux | -O0, -O1, -O2, -O3, -Os |

| Microsoft Visual C++ | 8.0 (2005) | Windows XP | /Od, /O1, /O2, /Ox |

The basic -O# optimization flags were tested on all three compilers. Some further gcc flags were also tested, as the default -O# are still quite restrictive. Furthermore (not included in the preceding list) I ran the speed tests on MinGW to double-check the timer resolution on Windows.

3 Test Method

Two programs are used to test and compare the cipher implementations.

3.1 verify

The first test is not a speed measurement; instead, the program verify is used to validate the different libraries against each other. Some fixed input is run through the different libraries and the encrypted output is compared. This is done to check the different implementations (especially those which I modified) for correctness.

Verify only tests five ciphers: Rijndael, Serpent, Twofish, Blowfish and 3DES. Rijndael, Blowfish and 3DES are implemented in almost every library and Serpent is the cipher I ultimately chose. Twofish and Blowfish are surprisingly fast in some results, so I had to check that they actually did some work.

For each library or custom implementation, verify takes a 128 KB buffer filled with a specific pattern. It then encrypts the buffer and compares the result with the buffers encrypted by the other implementations, thus checking that both (or more) implementations returned the same results. Then the cipher is used to decrypt the buffer again, and the buffer contents are verified to be the original data pattern.

The following implementations are checked against each other:

- Rijndael (AES): Custom(Rijmen), libgcrypt, libmcrypt, Botan, Crypto++, OpenSSL, Nettle, Beecrypt, Tomcrypt. (That is, all libraries.)

- Serpent: Custom(Gladman), Custom(Botan), libgcrypt, libmcrypt, Botan, Crypto++, Nettle.

- Twofish: libgcrypt, libmcrypt, Botan, Crypto++, Tomcrypt.

- Blowfish: libgcrypt, libmcrypt, Botan, Crypto++, Nettle, Tomcrypt.

- 3DES: libgcrypt, libmcrypt, Botan, Crypto++, OpenSSL, Nettle, Tomcrypt. (All except Beecrypt)
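The cross-check performed by verify can be sketched roughly as follows. This is only a minimal illustration, not the actual verify source: the `CipherImpl` wrapper, `make_pattern()` and `cross_verify()` are hypothetical names, and the byte pattern is an arbitrary choice. Each implementation is assumed to be wrapped in a pair of in-place encrypt/decrypt callbacks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

using Buffer = std::vector<std::uint8_t>;

// Hypothetical wrapper: one entry per library or custom implementation.
struct CipherImpl {
    std::string name;
    std::function<void(Buffer&)> encrypt;  // in-place encryption
    std::function<void(Buffer&)> decrypt;  // in-place decryption
};

// Fill a buffer with a fixed, reproducible pattern (arbitrary choice).
Buffer make_pattern(std::size_t size) {
    Buffer buf(size);
    for (std::size_t i = 0; i < size; ++i)
        buf[i] = static_cast<std::uint8_t>(i * 7 + 3);
    return buf;
}

// Returns true if all implementations produce identical ciphertext and
// each one decrypts its ciphertext back to the original pattern.
bool cross_verify(const std::vector<CipherImpl>& impls,
                  std::size_t size = 128 * 1024) {
    assert(!impls.empty());
    const Buffer plain = make_pattern(size);
    Buffer reference;
    bool have_reference = false;
    for (const CipherImpl& impl : impls) {
        Buffer work = plain;
        impl.encrypt(work);
        if (!have_reference) {
            reference = work;        // first implementation sets the reference
            have_reference = true;
        } else if (work != reference) {
            return false;            // ciphertexts differ between implementations
        }
        impl.decrypt(work);
        if (work != plain)
            return false;            // round trip did not restore the pattern
    }
    return true;
}
```

Comparing every implementation against the first one is sufficient here, because equality of ciphertexts is transitive; a failure only says that some implementation disagrees, not which one is wrong.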

3.2 speedtest

The core of each speed test consists of one encryption pass directly followed by a decryption pass. Thus both the encryption and decryption speed of the cipher are tested, and the results reflect the time to encrypt plus decrypt. The passes are performed on one buffer filled with a pattern.

The first variable is the size of the buffer en/decrypted. It ranges from 16 bytes to 1 MB. Only the buffer sizes 2^(4+n) with n = 0 .. 16 are measured. By also testing very small buffers, library overhead and cipher key preprocessing/initialization time are measured indirectly. This start-up overhead weighs less as the buffers get larger.

To make results more accurate despite the inaccurate time measurement device (gettimeofday()), en/decryption of small buffers is repeated a large number of times. The total run time of all repeats is then divided by the number of repetitions. The number of repetitions starts out such that at least 64 KB of data is processed. If one repeated run takes less than 0.7 seconds, the same test is redone with twice the amount of data processed. This way the repetition loop is lengthened until processing takes a sufficiently long time to allow good measurement with only moderate timer resolution.
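The buffer sizes and the doubling repetition loop could look roughly like the sketch below. It is an illustration under assumptions, not the original speedtest code: all function names are hypothetical, `pass` stands for one encrypt-plus-decrypt run on a buffer, and `std::chrono::steady_clock` is used in place of gettimeofday() to keep the example portable.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <vector>

// Power-of-two buffer sizes from 16 bytes (2^4) up to 1 MB (2^20).
std::vector<std::size_t> buffer_sizes() {
    std::vector<std::size_t> sizes;
    for (int n = 0; n <= 16; ++n)
        sizes.push_back(std::size_t(1) << (4 + n));
    return sizes;
}

// Initial repetition count so that at least 64 KB of data is processed.
std::size_t initial_repeats(std::size_t buffer_size) {
    assert(buffer_size > 0);
    const std::size_t min_bytes = 64 * 1024;
    return (min_bytes + buffer_size - 1) / buffer_size;  // rounds up, at least 1
}

// Average time in seconds of one pass over a buffer of buffer_size bytes.
// `pass` is a hypothetical callback doing one encryption plus decryption.
// The repetition count is doubled until one timed run lasts min_seconds.
double seconds_per_pass(const std::function<void()>& pass,
                        std::size_t buffer_size,
                        double min_seconds = 0.7) {
    using clock = std::chrono::steady_clock;  // assumption: not gettimeofday()
    std::size_t repeats = initial_repeats(buffer_size);
    for (;;) {
        const auto start = clock::now();
        for (std::size_t i = 0; i < repeats; ++i)
            pass();
        const std::chrono::duration<double> elapsed = clock::now() - start;
        if (elapsed.count() >= min_seconds)
            return elapsed.count() / static_cast<double>(repeats);
        repeats *= 2;  // too short to measure reliably: double the work
    }
}
```

With a 16-byte buffer the loop starts at 4096 repetitions, while a 1 MB buffer starts with a single pass; in both cases the timed run grows until it lasts long enough for a coarse timer.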

Furthermore each buffer size (including all internal repetitions) is tested 16 times. The 16 measurements of one buffer size are not taken back to back; instead all buffer sizes are tested consecutively, and then this whole sequence is repeated.

The time is measured on Linux using gettimeofday() and on Windows using timeGetTime(). The results are written out to a text file for further processing with gnuplot. Each result includes the buffer size, average, standard deviation, minimum and maximum; both the absolute time measured and the reached throughput speed are printed into the result file.
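The per-buffer-size statistics written to the result file can be computed along these lines. This is a hedged sketch: `Stats`, `summarize()` and `throughput()` are hypothetical names, and the population form of the standard deviation is an assumption, since the text does not say which form the program uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Stats {
    double average;
    double stddev;
    double minimum;
    double maximum;
};

// Summarize the repeated time measurements (in seconds) of one buffer size.
Stats summarize(const std::vector<double>& samples) {
    assert(!samples.empty());
    const double count = static_cast<double>(samples.size());
    double sum = 0.0;
    for (double s : samples)
        sum += s;
    const double avg = sum / count;
    double squares = 0.0;
    for (double s : samples)
        squares += (s - avg) * (s - avg);
    // Assumption: population standard deviation (divide by N, not N - 1).
    const double sd = std::sqrt(squares / count);
    const auto mm = std::minmax_element(samples.begin(), samples.end());
    return {avg, sd, *mm.first, *mm.second};
}

// Throughput in bytes per second: speed = bytes / time.
double throughput(std::size_t bytes, double seconds) {
    assert(seconds > 0.0);
    return static_cast<double>(bytes) / seconds;
}
```

For example, processing 1048576 bytes in 0.5 seconds gives a throughput of 2097152 bytes per second, i.e. 2 MB/s.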

4 Test Environment

4.1 CPUs and Distributions

The speed measurements were performed on five different computers available to me. They have five different CPUs:

- Intel Pentium 4 at 3.2 GHz with 1024 KB L2 cache - Short: p4-3200

- Intel Pentium 3 (Mobile) at 1.0 GHz with 512 KB L2 cache - Short: p3-1000

- Intel Pentium 2 at 300 MHz with 512 KB L2 cache - Short: p2-300

- Intel Celeron at 2.66 GHz with 256 KB L2 cache - Short: cel-2660

- AMD Athlon XP 2000+ with 256 KB L2 cache - Short: ath-2000

To compare distribution package speed, six different Linux distributions were used:

- Gentoo stable

- Debian 4.0 etch (currently stable)

- Debian lenny (currently testing)

- Ubuntu 7.10 Gutsy Gibbon

- Ubuntu 8.04 Hardy Heron

- Fedora 8

For a detailed listing of the different libraries' package versions used in the speed tests, see the extra page: Distribution Package Versions.

4.2 Basic Test Program Runs and Plots (results)

The speedtest program was run many times. Small code changes and adaptions required many re-runs during the whole testing process. The final runs were performed from 2008-04-09 to 2008-04-22. They produced the text result files found in the downloadable package.

The text result files contain the raw time and speed numbers. Two different gnuplot scripts are included, which visualize the numbers to show different aspects.

The results directory of the package contains PDFs named <cpu>-<distro>.pdf and <cpu>-<distro>-all.pdf (e.g. p4-3200-gentoo.pdf). These graphs read result files from only one run of all speedtests; the first plots contain the different ciphers contained in each library. The second part then groups the results by cipher: displaying the speed of the different libraries.

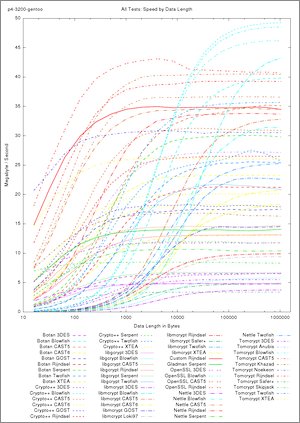

The PDFs <cpu>-<distro>-all.pdf contain all libraries and all ciphers run on a single CPU/distribution combination. These graphs contain 57 plot lines and are really full. Their size is trimmed to be printed on A4 paper.

To compare the different CPU/Distribution combinations against each other, two further PDFs are included: sidebyside-comparison.pdf and distrospeed.pdf.

The sidebyside-comparison.pdf contains eight plots on each page. The plots of all <cpu>-<distro>.pdf are grouped together and plots displaying the same cipher/libraries are put on one page. This way a direct side-by-side comparison can be done.

In more detail, distrospeed.pdf contains plots which show the same library as run on different CPU/distro combinations. Not all combinations are included; only those run on my p4-3200 desktop computer are compared.

4.3 Compiler / Flags Test Program Runs and Plots (results-flags)

The test runs to compare different compilers and compiler flag sets are also included in the package under a different results directory. The final runs of this result set were performed on 2008-05-26. All compiler tests were run on the same CPU / computer: p4-3200 - Pentium 4 3.2 GHz

The biggest issue was to automate compilation of both the speedtest code and the cryptography libraries with all the different flags and compilers. This was not done for all cryptography libraries, but only for Crypto++. Its configuration script was simple and allowed exact definition of the compiler and flags (other libraries' configure scripts stripped out or automatically added optimization flags). Crypto++ also provided project files for Visual C++.

The results-flags directory contains some compilation automation scripts and a perl/gnuplot script. The script calls gnuplot subprograms and feeds generated gnuplot commands into the plotter to create the two PDFs named flags.pdf and flags-gcc3.4.pdf.

flags.pdf is the primary result file and compares the different compilers and compiler flags for all the different ciphers available.

flags-gcc3.4.pdf was only used to check MinGW's special gcc 3.4.5 against the gcc 3.4.6 on Gentoo Linux. Thus the timer resolution of Windows and Linux was double-checked so the results of Visual C++ are comparable to those run on Linux.

5 Observation and Discussion

This section describes the observations and results found in the different graphs. Please note that all these results are subjective and statistically irrelevant because of the small number of computers tested. However they do give insight into the problems of encryption performance.

All plot bitmaps in the following text are linked to their full-scale PDF originals.

5.1 Ciphers Compared

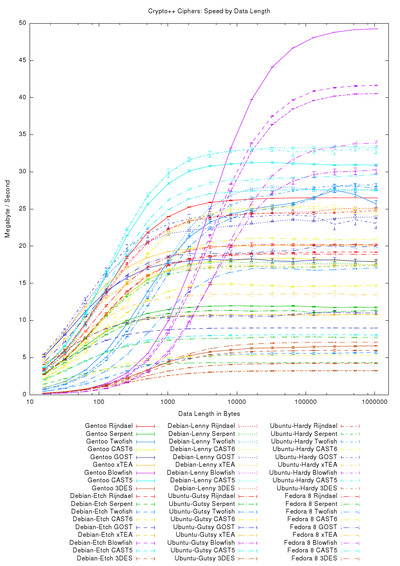

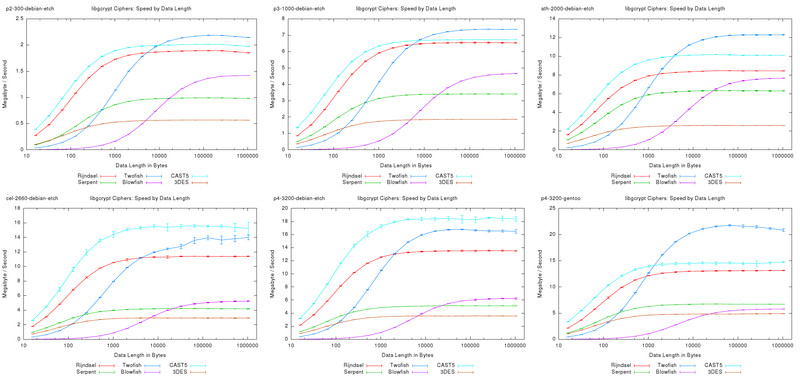

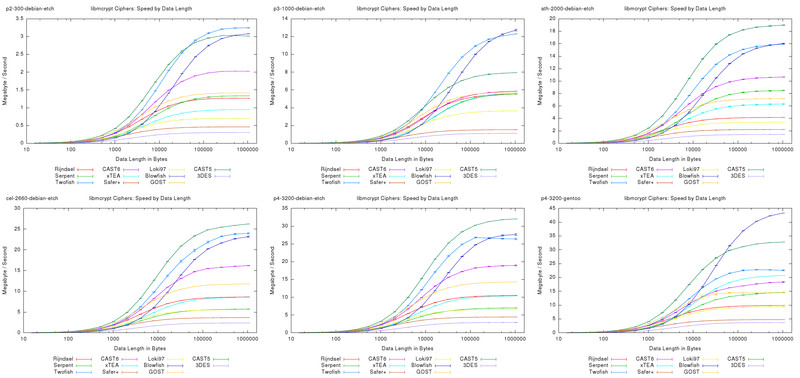

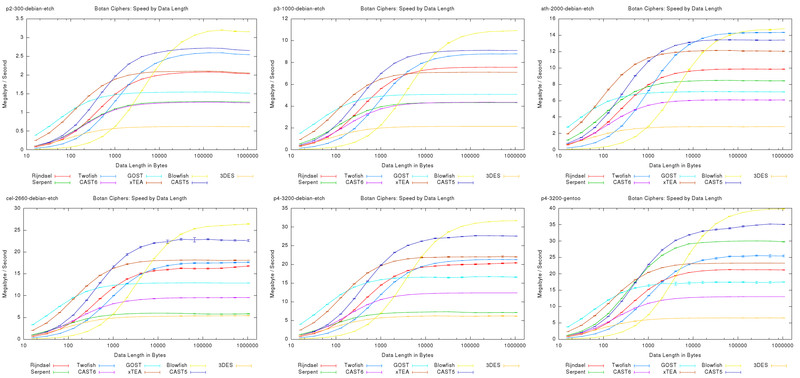

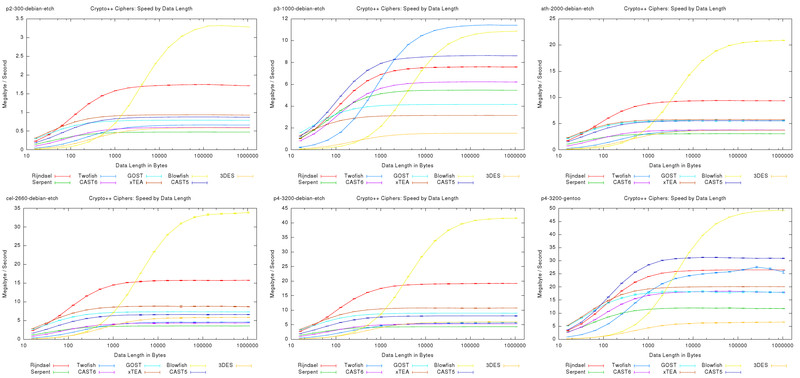

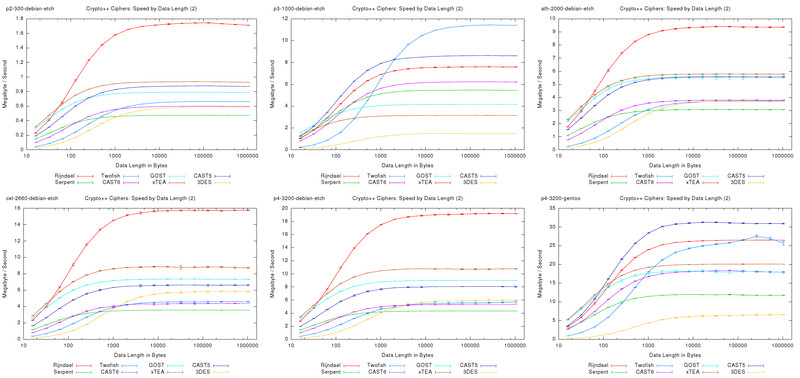

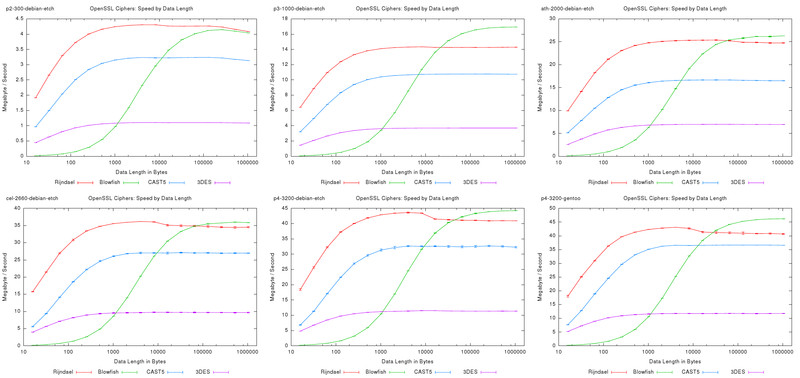

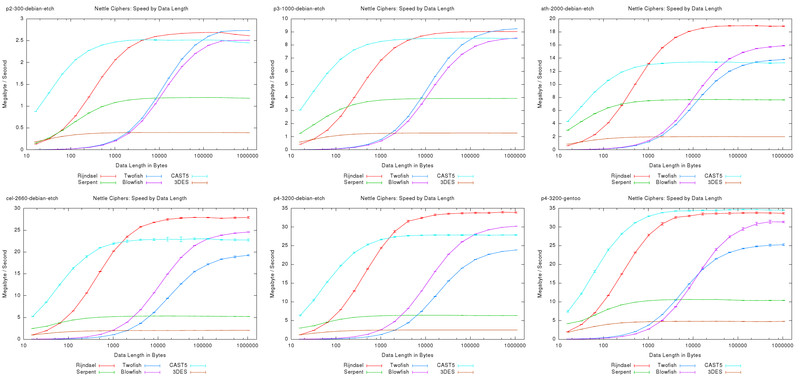

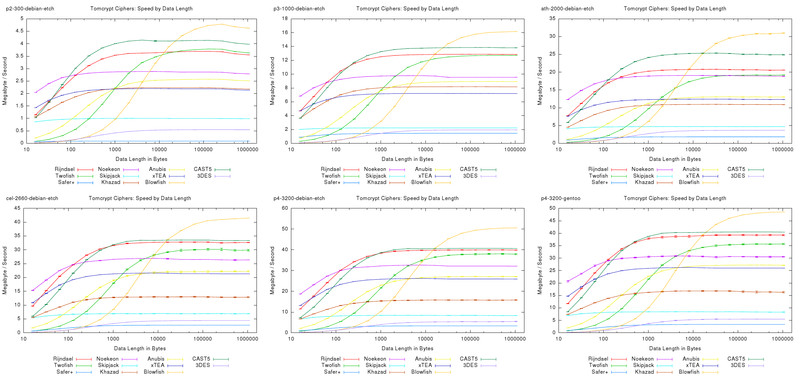

The first set of plots contains straightforward performance data of the different ciphers provided by each library.

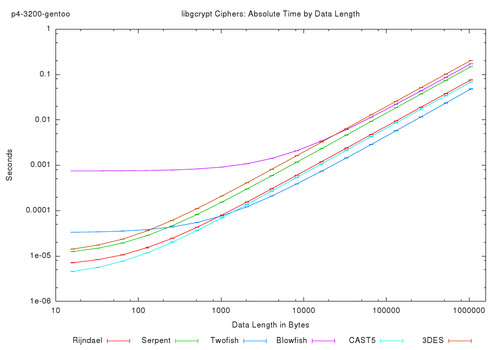

The plot above displays the absolute time in seconds required to run one unit of the speed test. One speed test unit consists of encryption and decryption of a buffer with a specific length. The length of the buffer tested is the value on the x-axis and ranges from 16 to 1048576 bytes. The buffer lengths are plotted logarithmically, meaning each step to the right actually doubles the length. This way the small lengths are also shown in detail. In the above graph the average absolute time and the standard deviation (only visible as the small horizontal dashes) are plotted.

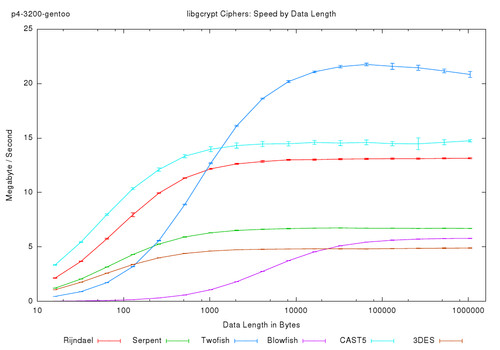

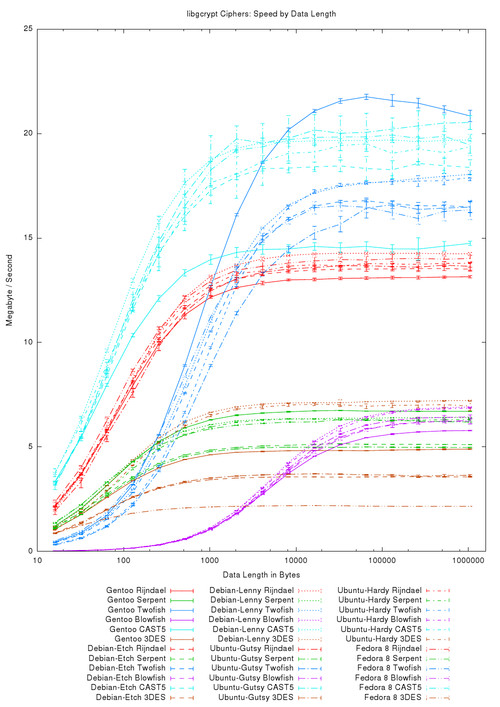

Much more informative is the above plot, which shows speed instead of absolute time, where speed = bytes / time. The speed is displayed in megabytes per second. The above plot shows some ciphers available in the libgcrypt library.

A first observation identifies Twofish as the fastest cipher, once buffers are larger than about 9000 bytes. It achieves more than 20 MB/s throughput.

All ciphers require a start-up overhead, which explains the lower speed for small buffers. This start-up overhead mainly consists of cipher key-schedule context precalculations, but other things like library overhead, memory allocation and initialization also take their toll. Twofish and Blowfish need the longest to start up; all others are about the same. The start-up cost is visible in the graph by regarding how large a buffer must be to amortize the precalculations. This is where the plot line reaches its horizontal value.

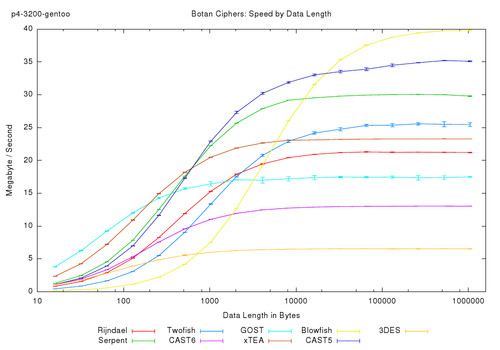

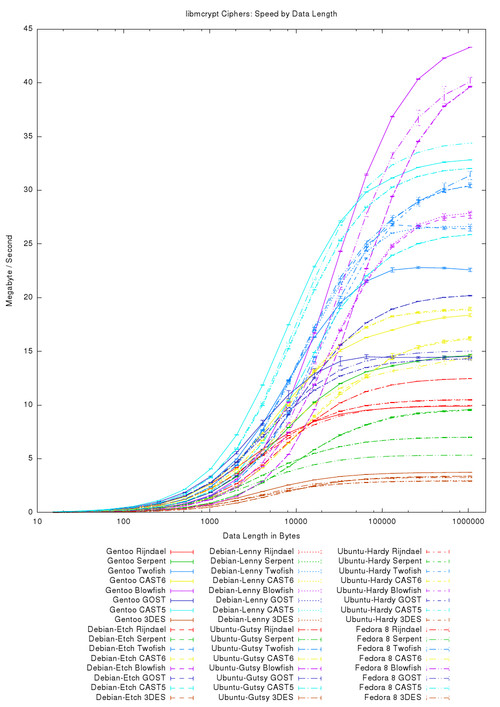

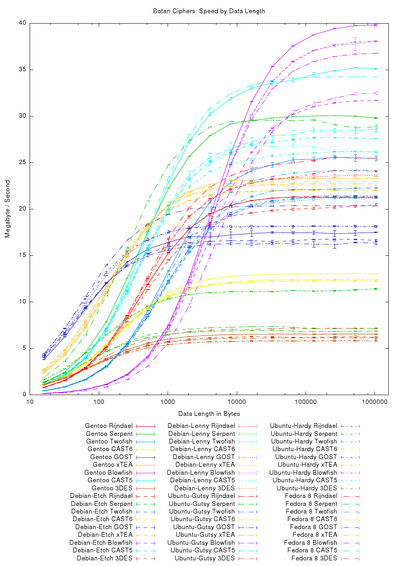

The above plot shows the ciphers tested in the Botan library. This plot shows a totally different picture than the previous one. This time Blowfish is the "winner". But, more importantly, all ciphers perform significantly better than their implementations in libgcrypt; of course one can only directly compare ciphers available in both libraries. Note the y-axis scale going up to 40 MB/s.

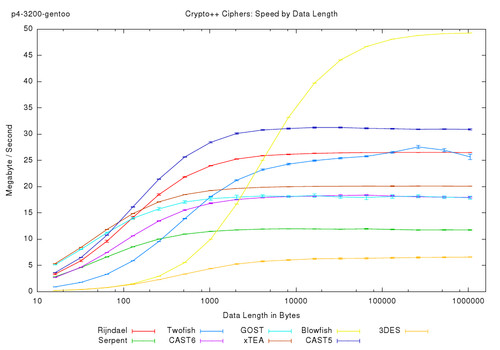

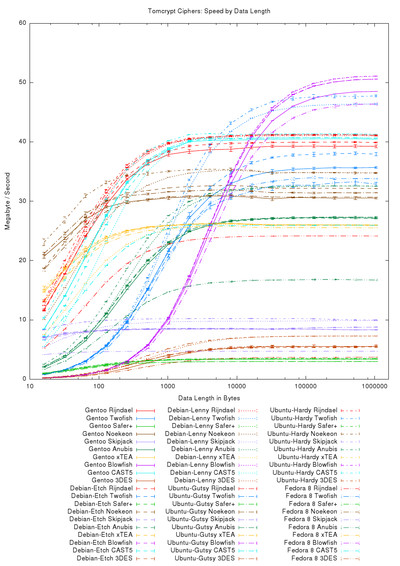

Similar speeds are observable in the above plot of the ciphers from the Crypto++ library. The best performing cipher is again Blowfish with almost 50 MB/s throughput. However, it is also the slowest to start up and reach its peak performance. All other ciphers perform similarly to their counterparts in the Botan library, with the exception of Serpent. For some reason Serpent is less than half as fast as in the Botan library.

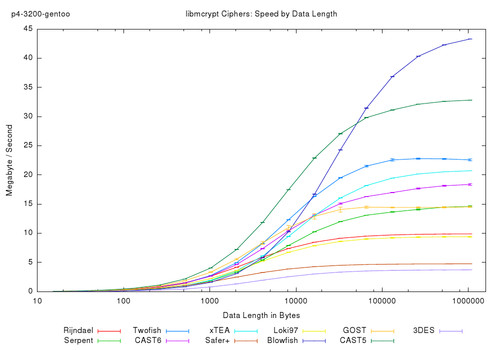

The real surprise of the speedtest is the above plot showing ciphers implemented in the libmcrypt library. The plot shows a massively higher start-up time for all ciphers in the library. Performance of libmcrypt for small buffers from 1000 to 10000 bytes is abysmally lower than for all other libraries. However, after the start-up overhead is amortized, the cipher implementations reach their expected speeds. I have no idea why libmcrypt has such an overhead during cipher allocation and initialization. This cannot be due to key schedule setup or similar cipher-related aspects, because they are common to all libraries. It must be something with (possibly special secure) memory allocation, cipher look-up, multi-thread mutex locking or other aspects of the library's organization. I would rather not think about the myriads of web applications using libmcrypt via PHP to encrypt small bits of user data, which is stored in some SQL database.

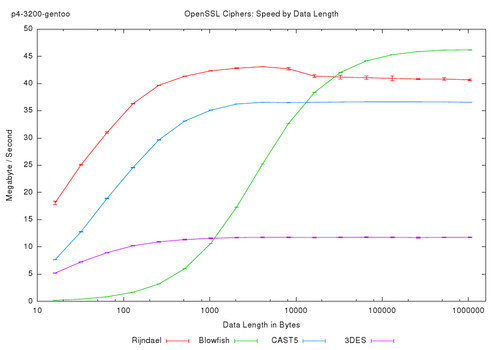

During my search for encryption libraries, I noted that the ubiquitous OpenSSL library also exports low-level cipher functions. Obviously the selection of ciphers in OpenSSL is directly linked to those required for SSL communication channels. It only provides 3DES, Blowfish, CAST5 and, in the newer OpenSSL versions, also AES. However the comparison of different libraries below will show that the relatively few cipher implementations in OpenSSL are highly optimized.

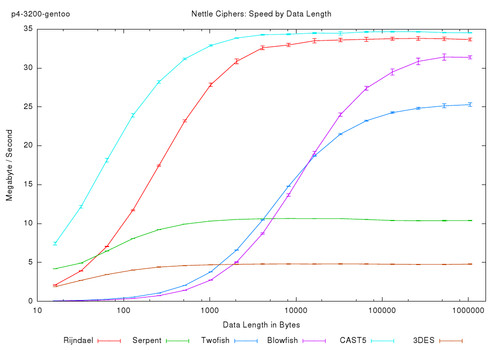

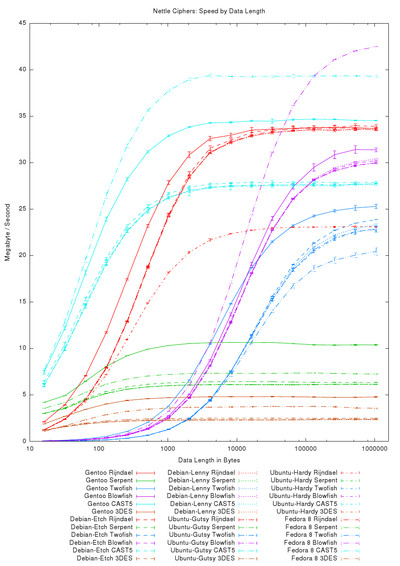

The Nettle library contains well-performing implementations of the most common ciphers.

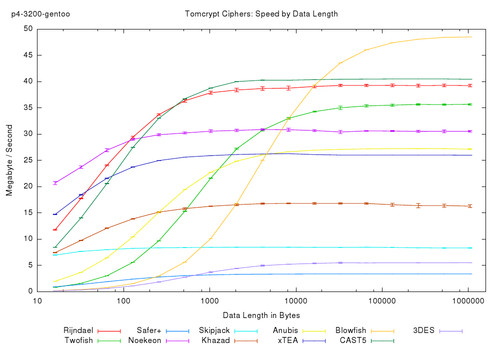

The second-to-last library in this first list is Tomcrypt. It contributes 11 ciphers to the speed test, some quite exotic like Noekeon, Skipjack and Anubis. Wikipedia brands Noekeon as a rather vulnerable cipher. Skipjack seems to have been a classified NSA cipher. Most interesting is Anubis, which was (co-)created by the same person who initially designed AES (Rijndael).

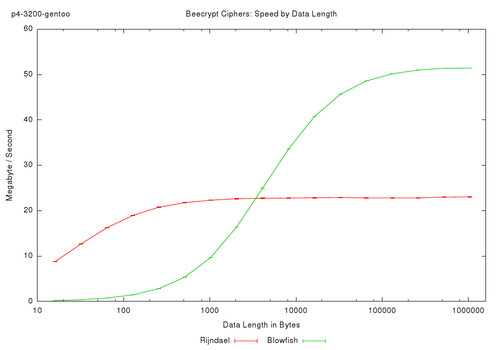

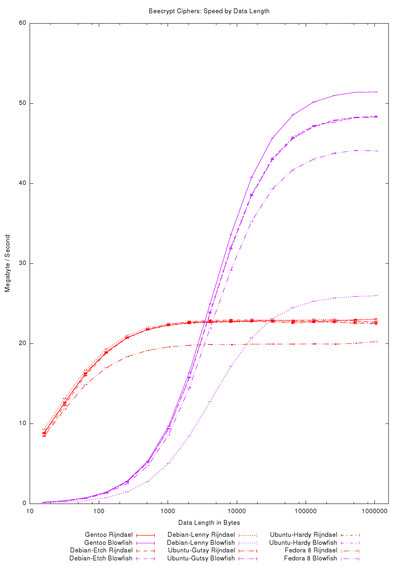

Last library is Beecrypt, which contains only two block ciphers. Thus the data plot contains only two lines. These results appear again in a better context in the library comparison below.

5.1.1 Sub-Conclusion

So which is the fastest cipher? That is a difficult question to answer. The main problem is that all test results above were generated on Gentoo. Gentoo is a Linux distribution compiled from source on each installation. So each Gentoo installation is to some degree different from others because compiler flags, used system libraries and other aspects can change quickly.

This is why the real "best" cipher speed comparison table is postponed to one of the following sections, in which different distributions are compared. Jump to the "best cipher" table if you are impatient.

The following table shows the maximum speed in KB/s of each cipher implementation:

| Cipher | libgcrypt | libmcrypt | Botan | Crypto++ | OpenSSL | Nettle | Beecrypt | Tomcrypt | Average |

|---|---|---|---|---|---|---|---|---|---|

| Blowfish | 6,765 | 42,673 | 38,828 | 50,407 | 56,510 | 32,910 | 52,751 | 50,141 | 41,373 |

| CAST5 (128) | 22,061 | 33,538 | 36,306 | 32,522 | 34,775 | 35,264 | | 36,798 | 33,037 |

| Noekeon | | | | | | | | 30,311 | 30,312 |

| Twofish | 24,947 | 22,235 | 26,160 | 28,360 | 26,208 | 35,548 | 27,243 | ||

| Rijndael AES | 13,925 | 10,398 | 21,917 | 27,111 | 45,461 | 34,754 | 23,684 | 40,119 | 27,171 |

| Anubis | | | | | | | | 27,049 | 27,049 |

| XTEA | 21,168 | 23,849 | 20,603 | 26,882 | 23,126 | ||||

| CAST6 (256) | 18,207 | 13,298 | 18,539 | 16,681 | |||||

| GOST | 13,511 | 17,943 | 18,281 | 16,578 | |||||

| Serpent | 7,004 | 15,111 | 30,268 | 12,220 | | 11,272 | | | 15,175 |

| Loki97 | 9,552 | 9,552 | |||||||

| Skipjack | | | | | | | | 6,928 | 6,928 |

| 3DES | 5,195 | 3,525 | 6,979 | 6,702 | 11,940 | 4,845 | | 5,683 | 6,410 |

| Safer+ | 4,886 | 7,075 | 5,981 |

5.2 Libraries Compared by Cipher

The second set of plots compares the eight cryptography libraries against each other. One cipher is selected for comparison and all libraries providing this cipher are plotted into one chart. Obviously not all libraries provide all ciphers, so the plots have different numbers of lines.

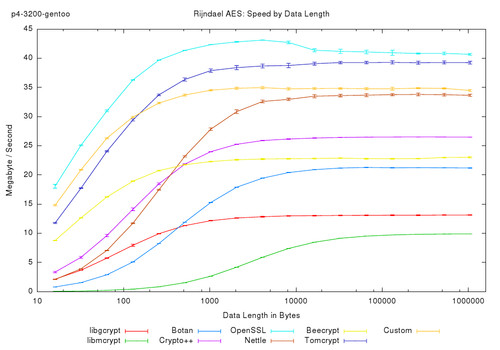

The first cipher to compare is Rijndael (AES). It is provided in all eight libraries, plus one extra custom implementation. The custom implementation is basically the original Rijndael code as released by the author. The only modification was to adapt it into a convenient C++ class.

The plot shows that the different libraries vary greatly in performance. In the range from 10 MB/s to more than 40 MB/s the libraries' performances are fairly distributed. Lowest in speed is libmcrypt, while the highest speed was achieved by OpenSSL. My custom implementation came in third. Start-up overhead was also highest in libmcrypt. Most other libraries show low start-up overhead.

All Rijndael implementations were verified against each other, which means that all work as expected and output the same cipher text for equal input. Thus the above results cannot show totally different calculations; the output is always the same.

This is maybe the most surprising result of the whole speed test: all cipher implementations' calculation results are verified to be exactly the same, yet the performance of the tested libraries varies so greatly that this seems absurd.

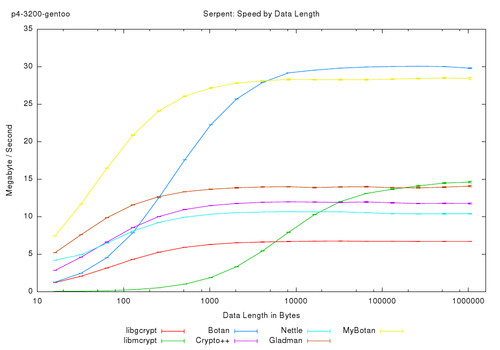

The second plot shows how fast the Serpent cipher is performed by the different libraries. For Serpent two different custom implementations are included. The first is optimized by Dr. Brian Gladman using different theoretic methods. The second was extracted from Botan; it will be used by my CryptoTE editor.

Serpent is a slower (and more secure) cipher than Rijndael. The average libraries all show a performance speed of less than 15 MB/s. However, the big exception turned out to be Botan, showing almost twice the speed of all other libraries. With almost 30 MB/s it surpasses many Rijndael implementations. This is why I extracted it from Botan into a stand-alone C++ class for use in my programs. The speed of Botan was retained and for small buffers the start-up overhead introduced by Botan was eliminated. Whether this amazing performance is due to special CPU features or compiler flags will be discussed in the following sections.

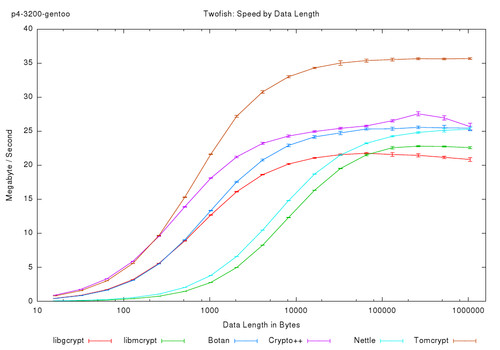

Twofish is another candidate from the AES-contest. It is implemented by six of the studied libraries. All show the same slow start-up of the cipher. It requires much preprocessing of the key material but achieves a higher throughput than Serpent for larger buffers. The speed achieved by all libraries is larger than 20 MB/s.

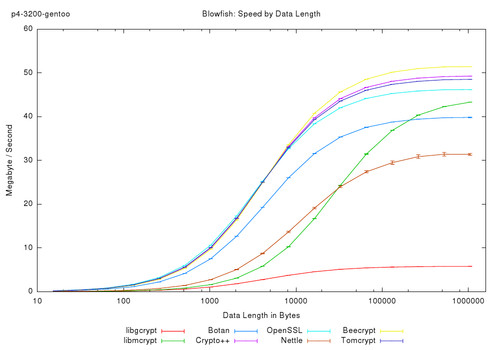

The predecessor of Twofish is the Blowfish cipher. Implemented by all eight examined libraries, it shows a slow start-up similar to Twofish. After amortizing the start-up overhead, Blowfish performs faster than Twofish. However, the two should not be compared directly, because they belong to different security classes: Blowfish is old, while Twofish is much newer and is generally regarded as more secure.

With almost 50 MB/s, Beecrypt's Blowfish implementation presented the highest achieved speed in the complete speed test on Gentoo. Close behind are Crypto++, Tomcrypt and OpenSSL. Compared to 50 MB/s libgcrypt's speed of roughly 6 MB/s, even on large buffer sizes, is really bad.

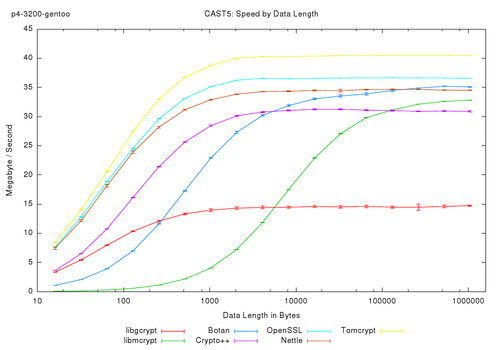

The cipher CAST5 is rather old, but still used e.g. by PGP / GnuPG for symmetric encryption. It is implemented by all libraries except Beecrypt. This time all libraries perform similarly with an average speed of around 32 MB/s. Only libgcrypt falls out of line.

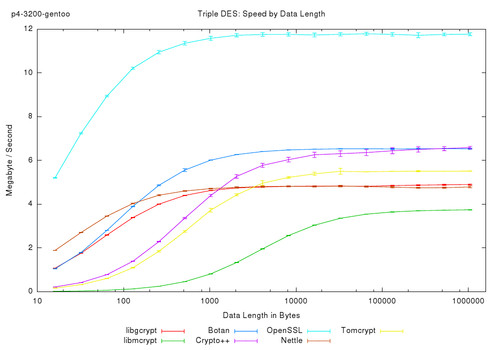

The last cipher to be compared is 3DES. Triple DES is very old compared to the others, however it is still widely used in VPN, SSL and hardware circuits. It is implemented by all libraries except beecrypt. Most unexpected is the speed of OpenSSL's implementation of 3DES: it beats all others by far. Obviously much optimization has been put into this implementation, probably because 3DES is one of the encryption ciphers routinely used for SSL connections.

5.2.1 Sub-Conclusion

So which is the best / fastest library? That question can be answered here only for the Gentoo distribution. Comparing the libraries on Gentoo has the advantage that Gentoo, being source-compiled, can enable all optimizations and does not introduce performance problems imposed by pre-compiled binary packages or other problems which the binary package maintainer may have created.

However, how does one compare a library like beecrypt, which implements only two ciphers, to a library which implements eleven ciphers? Obviously only the ciphers actually available can be scored. The scoring analysis was done as follows: First the average speed for each cipher was calculated. Then each library's speed delta (difference to the average) was determined and added up. Thus the total difference over all implemented ciphers was taken for the following ranking. Note that in this analysis, if a cipher is implemented by only one library, the cipher adds zero score to the total. All speeds are in KB/s:

| p4-3200-gentoo | Custom | OpenSSL | Beecrypt | Tomcrypt | Botan | Crypto++ | Nettle | libmcrypt | libgcrypt | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| Blowfish | | 47,299 / +6,884 | 52,662 / +12,247 | 49,685 / +9,269 | 40,781 / +365 | 50,448 / +10,033 | 32,170 / -8,246 | 44,355 / +3,940 | 5,922 / -34,493 | 40,415 |
| CAST5 (128) | | 37,528 / +4,480 | | 41,494 / +8,446 | 36,062 / +3,014 | 32,018 / -1,030 | 35,514 / +2,466 | 33,618 / +570 | 15,103 / -17,945 | 33,048 |
| Noekeon | | | | 31,621 / +0 | | | | | | 31,621 |
| Anubis | | | | 27,898 / +0 | | | | | | 27,898 |
| Rijndael AES | 35,817 / +7,929 | 44,153 / +16,265 | 23,588 / -4,300 | 40,245 / +12,356 | 21,807 / -6,082 | 27,155 / -734 | 34,625 / +6,737 | 10,145 / -17,743 | 13,459 / -14,429 | 27,888 |
| Twofish | | | | 36,545 / +9,462 | 26,189 / -894 | 28,224 / +1,141 | 25,903 / -1,180 | 23,352 / -3,731 | 22,283 / -4,799 | 27,082 |
| XTEA | | | | 26,910 / +3,768 | 23,844 / +702 | 20,595 / -2,547 | | 21,218 / -1,924 | | 23,142 |
| Khazad | | | | 17,221 / +0 | | | | | | 17,221 |
| GOST | | | | | 17,912 / +735 | 18,736 / +1,559 | | 14,885 / -2,293 | | 17,178 |
| Serpent | 29,171 / +12,112 | | | | 30,775 / +13,715 | 12,266 / -4,794 | 10,914 / -6,145 | 14,962 / -2,097 | 6,910 / -10,149 | 17,059 |
| CAST6 (256) | | | | | 13,349 / -3,647 | 18,824 / +1,827 | | 18,816 / +1,820 | | 16,996 |
| Loki97 | | | | | | | | 9,637 / +0 | | 9,637 |
| Skipjack | | | | 8,683 / +0 | | | | | | 8,683 |
| 3DES | | 12,070 / +5,649 | | 5,644 / -776 | 6,698 / +277 | 6,744 / +323 | 4,940 / -1,481 | 3,834 / -2,587 | 5,015 / -1,406 | 6,421 |
| Safer+ | | | | 3,463 / -712 | | | | 4,888 / +712 | | 4,175 |
| Delta Sum | +20,041 | +33,278 | +7,947 | +41,813 | +8,186 | +5,779 | -7,848 | -23,332 | -83,221 | |
| Delta Average | +10,020 | +8,320 | +3,974 | +3,801 | +910 | +642 | -1,308 | -2,121 | -13,870 | |
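The scoring procedure can be retraced with a few lines of Python. This is only an illustrative sketch, not the script used for the original analysis, and the function and variable names are made up for this example. It uses just the Blowfish and Rijndael AES rows of the table (speeds in KB/s), so only Beecrypt, which implements exactly these two ciphers, receives its complete score.

```python
# Speeds in KB/s from the p4-3200-gentoo table (Blowfish and Rijndael AES rows only).
speeds = {
    "Blowfish": {
        "OpenSSL": 47299, "Beecrypt": 52662, "Tomcrypt": 49685, "Botan": 40781,
        "Crypto++": 50448, "Nettle": 32170, "libmcrypt": 44355, "libgcrypt": 5922,
    },
    "Rijndael AES": {
        "Custom": 35817, "OpenSSL": 44153, "Beecrypt": 23588, "Tomcrypt": 40245,
        "Botan": 21807, "Crypto++": 27155, "Nettle": 34625, "libmcrypt": 10145,
        "libgcrypt": 13459,
    },
}


def score(speeds):
    """Return {library: (delta_sum, delta_average)} over the given ciphers."""
    deltas = {}
    for cipher, by_library in speeds.items():
        # Step 1: average speed of this cipher over all libraries implementing it.
        average = sum(by_library.values()) / len(by_library)
        # Step 2: each library's difference to that average.
        for library, speed in by_library.items():
            deltas.setdefault(library, []).append(speed - average)
    # Step 3: add up the deltas and average them per library.
    return {lib: (sum(d), sum(d) / len(d)) for lib, d in deltas.items()}


scores = score(speeds)
delta_sum, delta_average = scores["Beecrypt"]
print(f"Beecrypt: delta sum {delta_sum:+,.0f}, delta average {delta_average:+,.0f}")
```

For Beecrypt this reproduces the Delta Sum of +7,947 from the table; the delta average can differ from the table by one unit because of rounding. A cipher implemented by a single library has a delta of zero, as noted above, since its speed equals the cipher average.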

The winning "library" is my set of custom implementations. No surprise there: I wouldn't have included them in the test if they were slow.

So the real winner is OpenSSL. Its implementations are on average 8,320 KB/s faster than the average implementation. Second and third place are very close and go to Beecrypt and Tomcrypt.

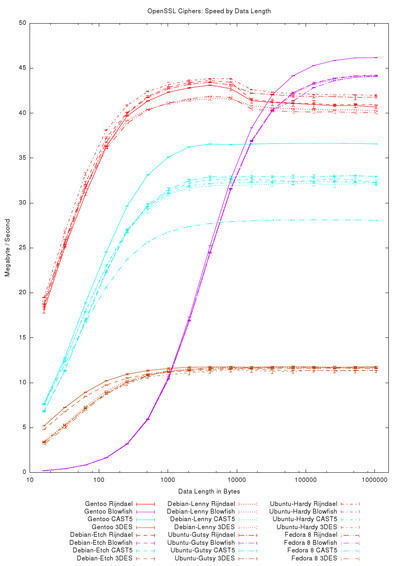

5.3 Findings On Different Distributions

The first problem with the last two sections is that all libraries were taken from my Gentoo system. Gentoo, however, is a distribution where all packages are compiled from source using individual compiler flags. This approach is not shared by most other Linux distributions, which ship pre-compiled binary packages.

So are the findings above specific to my Gentoo system? Or even to the flags specified in my configuration?

To clarify this issue, five other Linux distributions were installed in chroot jails on the same computer. The speed tests compiled in the chroots thus use the binary-distributed library versions.

The chart above plots the selected ciphers from libgcrypt run on the six Linux distributions on the same CPU. Each cipher has one distinct color and the six distributions are distinguished through the different line styles: solid, dashed, dot-line-dot, etc. (Click on the plot for a zoomable PDF.)

One can see that some ciphers, namely Rijndael, Serpent and Blowfish, perform very similarly on all platforms: their colored lines follow about the same path. Twofish too performs similarly on all distributions except Gentoo, probably due to extra compiler optimization. CAST5 shows a rather large variation of speeds; CAST5 also has large standard deviations compared to the others. 3DES also shows a rather large speed range.

All in all, there are no real surprises in the above chart. Perhaps the strangest result is that compiler-optimized Gentoo (the solid line) performs a lot better on Twofish but also a lot worse on CAST5.

The next library, shown above, is libmcrypt. This chart verifies that mcrypt has very slow start-up times, not only on Gentoo but on all distributions. The chart excludes some ciphers (XTEA, Safer+ and Loki97) to increase readability. Again some ciphers show very little variation in throughput speed: Rijndael, CAST6 and 3DES. All others also show no great surprises.

Botan and Tomcrypt show the same effects. Some cipher implementations perform nearly equivalently on all distributions, others show a larger but not huge variation.

The corresponding chart for Crypto++ is very crowded and shows a wide variation even for ciphers that previously did not vary. Crypto++ seems to be very sensitive to optimization. Nettle's chart shows the same observations as before.

The two remaining libraries are OpenSSL and Beecrypt. OpenSSL shows that its cipher implementations perform almost unvaryingly well on all distributions. This promises good performance for SSL secure sockets on all distributions.

Beecrypt shows only one new aspect: the Blowfish implementation on Debian-lenny shows a serious drop compared to Debian-etch. This is probably due to the gcc compiler version change to 4.2. More about compilers and compiler flags later.

5.3.1 Sub-Conclusion

So which distribution performs best? To analyze this question, a speed table was created for each distribution. It contains the maximum value of each plot, that is, the maximum speed the cipher reached. Then the average speed of all cipher / library test runs performed on one Linux distribution is calculated. The table below shows this average per library and the average over all test runs. The values below each average are the (minimum - maximum) speeds across all ciphers implemented in the library. Again all values are in KB/s.

| | gcrypt | mcrypt | botan | cryptopp | openssl | nettle | beecrypt | tomcrypt | custom | average | relative |
|---|---|---|---|---|---|---|---|---|---|---|---|
| p4-3200-gentoo | 11,449 | 18,155 | 24,157 | 23,890 | 35,263 | 24,011 | 38,125 | 26,310 | 32,494 | 23,610 | 100% |
| | (5,015 - 22,283) | (3,834 - 44,355) | (6,698 - 40,781) | (6,744 - 50,448) | (12,070 - 47,299) | (4,940 - 35,514) | (23,588 - 52,662) | (3,463 - 49,685) | (29,171 - 35,817) | | |
| p4-3200-ubuntu-hardy | 12,192 | 18,175 | 20,412 | 24,390 | 33,743 | 19,161 | 36,411 | 28,477 | 32,077 | 22,941 | 2.8% |
| | (6,490 - 19,975) | (3,468 - 40,554) | (6,007 - 39,000) | (3,304 - 41,518) | (11,707 - 45,198) | (2,438 - 30,706) | (23,285 - 49,538) | (3,570 - 52,340) | (26,935 - 37,219) | | |
| p4-3200-debian-lenny | 12,384 | 15,044 | 20,480 | 24,459 | 33,179 | 21,071 | 25,094 | 28,290 | 31,819 | 22,140 | 6.2% |
| | (6,564 - 20,171) | (3,017 - 32,796) | (5,920 - 38,985) | (3,345 - 41,500) | (11,972 - 45,110) | (2,393 - 34,414) | (23,591 - 26,597) | (3,523 - 52,293) | (27,296 - 36,341) | | |
| p4-3200-ubuntu-gutsy | 11,261 | 18,176 | 19,759 | 18,051 | 33,844 | 20,928 | 36,354 | 26,544 | 31,904 | 21,620 | 8.4% |
| | (3,804 - 21,024) | (3,461 - 40,597) | (6,296 - 37,651) | (4,336 - 31,028) | (11,878 - 45,176) | (2,434 - 34,379) | (23,293 - 49,416) | (3,036 - 51,821) | (28,183 - 35,626) | | |
| p4-3200-fedora8 | 11,140 | 17,069 | 23,755 | 19,249 | 32,225 | 25,121 | 32,960 | 21,229 | 29,038 | 21,313 | 9.7% |
| | (2,246 - 20,412) | (3,337 - 41,066) | (7,311 - 35,071) | (7,241 - 34,668) | (11,974 - 45,253) | (3,868 - 43,517) | (20,742 - 45,177) | (3,051 - 47,499) | (24,503 - 33,573) | | |
| p4-3200-debian-etch | 10,899 | 15,049 | 18,898 | 12,523 | 33,814 | 21,324 | 36,537 | 26,862 | 32,630 | 20,179 | 14.5% |
| | (3,660 - 19,020) | (2,990 - 32,804) | (6,425 - 32,452) | (4,439 - 42,647) | (11,805 - 45,298) | (2,559 - 34,795) | (23,517 - 49,558) | (3,448 - 51,800) | (29,106 - 36,155) | | |
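The relative column can be read as each distribution's slowdown against Gentoo, which serves as the 100% baseline. That reading is inferred from the numbers rather than stated explicitly; the short Python sketch below (names chosen for illustration) recomputes it from the average column.

```python
# Average speed in KB/s over all cipher / library test runs per distribution,
# copied from the "average" column of the table above.
averages = {
    "p4-3200-gentoo": 23610,
    "p4-3200-ubuntu-hardy": 22941,
    "p4-3200-debian-lenny": 22140,
    "p4-3200-ubuntu-gutsy": 21620,
    "p4-3200-fedora8": 21313,
    "p4-3200-debian-etch": 20179,
}

# The fastest distribution is the baseline; every other one is expressed
# as a percentage slowdown against it.
fastest = max(averages.values())
slowdown = {name: 100 * (1 - avg / fastest) for name, avg in averages.items()}

for name, pct in slowdown.items():
    print(f"{name:22s} {pct:5.1f}% slower than the fastest distribution")
```

The computed values agree with the relative column, for example 2.8% for ubuntu-hardy and 14.5% for debian-etch.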

Obviously Gentoo is the fastest distribution. No surprise here, the libraries were compiled from source with high optimization levels.

The only other result seen here is that "newer" distributions (ubuntu-hardy and debian-lenny) perform better than older ones. This is probably due to the compiler version bump from gcc 3.4.x to gcc 4.1.x. More about that in the section Compiler and Optimization Flags.

See the external table file for a detailed speed table listing for all distributions.

5.4 Ciphers compared by CPU

The tests discussed in the last three sections (ciphers, libraries and distribution comparisons) were all performed on my development computer. It has a Pentium 4 CPU at 3.2 GHz. To determine if any of the previous results are due to special attributes of the Pentium 4 architecture, the speed test was repeated on four other CPUs / computers. To make the comparison independent of the Linux distribution, Debian etch was installed on all computers (chrooted on some). The plots below display results of the speed test on the five CPUs side by side. The sixth plot shows results from my Pentium 4 run with Gentoo, instead of Debian etch; these are the same plots as in the section "Ciphers Compared" just for comparison.

Again we address libgcrypt's results first. All five results from Debian etch look similar. From p2-300 to p3-1000 the ciphers' speed increases more than threefold (Rijndael from 2 MB/s to 7 MB/s), but all relative speeds are unchanged. Also cel-2660 and p4-3200 show very much the same picture, scaled only by the increased CPU speed. Yet these two chart pairs (p2-300/p3-1000 vs. cel-2660/p4-3200) show different relative speeds: most obviously, Twofish is best on p2-300/p3-1000 but CAST5 wins on cel-2660/p4-3200. More interesting is the fact that the three ciphers Blowfish, Serpent and 3DES don't scale with CPU speed as well as the other three do. ath-2000 shows a third picture, different from p2-300/p3-1000 and cel-2660/p4-3200. 3DES and Serpent are actually faster on the ath-2000 than on p4-3200. These implementations seem to work better with AMD's CPUs than with Intel's.

On all CPUs libmcrypt on Debian etch shows the same slow start-up. Since this rules out the library for almost all purposes, I will not go into more detail on the CPU comparison.

Next we regard a faster library: Botan. Again the CPUs' results form three distinct groups with equal relative speeds: p2-300/p3-1000, cel-2660/p4-3200 and ath-2000. But compared to libgcrypt the relative speeds change less: only Serpent and Twofish show large changes from CPU to CPU. Again ath-2000 shows better relative speed results for these ciphers than the faster CPUs cel-2660/p4-3200. Interesting is also the comparison of Debian etch with Gentoo on the p4-3200: the plots show almost equal relative performance with Gentoo's higher optimization, with one exception: Serpent performs four times as fast on Gentoo.

Crypto++ is the next library in the speed test. We already saw that Crypto++ is very sensitive to optimization flags. Looking at the five charts, p3-1000 immediately catches the eye: Twofish is the fastest cipher only on that CPU, while all others show very high Blowfish speeds instead. On those CPUs Blowfish is almost twice as fast as the next fastest cipher, Rijndael. For a more detailed analysis the above plots were regenerated without the Blowfish data set.

Without Blowfish the other ciphers show almost equal relative performance on the four CPUs p2-300, ath-2000, cel-2660 and p4-3200. But even on the other ciphers the CPU p3-1000 performs differently. Most notably the Twofish cipher reaches almost 12 MB/s on p3-1000, but only 5 MB/s on ath-2000. Why this CPU falls out of line is beyond me.

OpenSSL's highly optimized cipher implementations perform very well on all tested CPUs. Again this promises very good SSL socket speeds on all x86 CPUs. No further important observations are found on these charts.

Beecrypt's results can again be grouped into three similar charts: p2-300/p3-1000, ath-2000 and cel-2660/p4-3200. As with libgcrypt, some ciphers (Serpent, 3DES) do not speed up as well as others: Rijndael, CAST5, Blowfish and Twofish utilize the faster CPUs better. And the Athlon does a better job with the less-scalable ciphers than Intel's CPUs.

Tomcrypt shows the same results as already seen on libgcrypt, Beecrypt and less prominently on the other result comparisons.

5.4.1 Sub-Conclusion

What do we conclude from the cross-CPU examination? The first and most important point is that the performance of an individual cipher does not depend on the specific CPU architecture. The speed usually scales well with CPU speed. However there are exceptions: some cipher implementations do not scale as well as others. Most often 3DES and Serpent show less relative performance gain.

Second interesting point is to determine the cipher which scales best. This requires a short calculation, because we need to account for the CPU's speedup. Thus the first step is to calculate the relative speed-up of each CPU. So first the average speed over all speed tests on all CPUs is taken: all-average in the following table. Then the average speed over all tests on each individual CPU is calculated and from that the relative speed to all-average is calculated: e.g. p3-1000 reaches only 65.2% of the all-average speed.

| | average | relative |
|---|---|---|
| all-average | 11,609 | |
| p2-300-debian-etch | 2,053 | 17.7% |
| p3-1000-debian-etch | 7,574 | 65.2% |
| ath-2000-debian-etch | 11,591 | 99.8% |
| cel-2660-debian-etch | 16,645 | 143.4% |
| p4-3200-debian-etch | 20,179 | 173.8% |
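As a quick check, the relative column is simply each CPU's average divided by all-average. A minimal Python sketch with the values from the table (variable names are illustrative):

```python
# Average speed in KB/s over all tests on all CPUs, and per CPU.
all_average = 11609
cpu_average = {
    "p2-300-debian-etch": 2053,
    "p3-1000-debian-etch": 7574,
    "ath-2000-debian-etch": 11591,
    "cel-2660-debian-etch": 16645,
    "p4-3200-debian-etch": 20179,
}

# Relative speed of each CPU: its own average divided by the all-average.
relative = {cpu: avg / all_average for cpu, avg in cpu_average.items()}

for cpu, factor in relative.items():
    print(f"{cpu:22s} {100 * factor:6.1f}%")
```

These factors are the speed-up multipliers used in the next step.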

Then the average performance of each cipher is calculated again across all CPUs and for each CPU individually. Of course only the libraries are taken into account which actually implement the cipher.

In the last step, for each cipher the average performance over all CPUs is scaled by the speed-up multiplier calculated above to get the linearly scaled, expected speed of the cipher on each CPU. This expected speed is then compared to the actually measured speed: negative values show less than the expected speed, positive values show a larger speed-up. The differences are shown in the table below; the sum of all differences to the expected performance (column total) signifies how well the CPU is suited for the tested cryptography algorithms.

| | average | cast5 | cast6 | 3des | blowfish | rijndael | xtea | twofish | serpent | total |
|---|---|---|---|---|---|---|---|---|---|---|
| all-average | 11,609 | 15,525 | 7,356 | 3,281 | 20,369 | 15,348 | 9,869 | 13,607 | 6,732 | |
| relative to average | | 133.7% | 63.4% | 28.3% | 175.5% | 132.2% | 85.0% | 117.2% | 58.0% | |
| p2-300-debian-etch | 2,053 | 2,714 | 1,332 | 605 | 3,459 | 2,705 | 1,584 | 2,596 | 1,336 | |
| expected | 17.7% | 2,746 | 1,301 | 580 | 3,603 | 2,715 | 1,746 | 2,407 | 1,191 | |
| difference | | -32 | 30 | 24 | -144 | -10 | -162 | 189 | 145 | 41 |
| p3-1000-debian-etch | 7,574 | 9,597 | 5,617 | 1,988 | 12,335 | 9,647 | 5,921 | 10,558 | 5,326 | |
| expected | 65.2% | 10,130 | 4,800 | 2,141 | 13,291 | 10,014 | 6,439 | 8,878 | 4,392 | |
| difference | | -532 | 817 | -153 | -955 | -367 | -518 | 1,680 | 933 | 905 |
| ath-2000-debian-etch | 11,591 | 15,162 | 7,015 | 3,404 | 20,625 | 14,602 | 9,387 | 13,534 | 8,715 | |
| expected | 99.8% | 15,501 | 7,345 | 3,276 | 20,338 | 15,324 | 9,854 | 13,586 | 6,721 | |
| difference | | -340 | -330 | 128 | 287 | -723 | -467 | -52 | 1,994 | 497 |
| cel-2660-debian-etch | 16,645 | 22,688 | 10,292 | 4,813 | 29,502 | 22,529 | 14,659 | 18,718 | 8,255 | |
| expected | 143.4% | 22,260 | 10,548 | 4,704 | 29,207 | 22,007 | 14,151 | 19,510 | 9,652 | |
| difference | | 428 | -256 | 108 | 295 | 523 | 508 | -792 | -1,397 | -583 |
| p4-3200-debian-etch | 20,179 | 27,463 | 12,526 | 5,595 | 35,925 | 27,256 | 17,795 | 22,626 | 10,026 | |
| expected | 173.8% | 26,986 | 12,787 | 5,703 | 35,407 | 26,679 | 17,155 | 23,652 | 11,702 | |
| difference | | 476 | -261 | -108 | 517 | 577 | 639 | -1,026 | -1,676 | -860 |
| min | | -532 | -330 | -153 | -955 | -723 | -518 | -1,026 | -1,676 | |
| max | | 476 | 817 | 128 | 517 | 577 | 639 | 1,680 | 1,994 | |
| normalized min | | -398 | -521 | -541 | -544 | -547 | -610 | -875 | -2,890 | |
| range | | 1,009 | 1,148 | 281 | 1,473 | 1,300 | 1,158 | 2,706 | 3,670 | |
| normalized range | | 754 | 1,811 | 993 | 839 | 984 | 1,362 | 2,309 | 6,329 | |

From the differences to the expected performance the cipher best suited for all tested CPUs can be determined: the worst case is compared (the largest negative deviation from the expected speed). However, because the min values are speeds in KB/s, a direct comparison is not valid: faster ciphers bring larger differences to the expected speed. The minimum speed difference has to be normalized by the cipher's relative average speed (row "relative to average") to allow a direct comparison. The same normalization is done for the (min - max) range size, which shows how large the cipher's speed fluctuation is.
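The whole calculation can be retraced for a single cipher. The Python sketch below does this for Serpent with the values from the tables above; it is an illustration with hypothetical names, not the original analysis script, and because it works with unrounded intermediate values the results can deviate from the table by a few KB/s.

```python
# Averages in KB/s taken from the tables above.
ALL_AVERAGE = 11609                      # all tests on all CPUs
CPU_AVERAGE = {                          # all tests on one CPU
    "p2-300": 2053, "p3-1000": 7574, "ath-2000": 11591,
    "cel-2660": 16645, "p4-3200": 20179,
}
SERPENT_AVERAGE = 6732                   # Serpent across all CPUs
SERPENT_MEASURED = {                     # Serpent on each CPU
    "p2-300": 1336, "p3-1000": 5326, "ath-2000": 8715,
    "cel-2660": 8255, "p4-3200": 10026,
}


def analyse(cipher_average, measured):
    """Return (differences per CPU, normalized min, normalized range)."""
    differences = {}
    for cpu, speed in measured.items():
        # Expected speed: cipher average scaled by the CPU's relative speed.
        expected = cipher_average * CPU_AVERAGE[cpu] / ALL_AVERAGE
        differences[cpu] = speed - expected
    # Normalize by the cipher's speed relative to the all-average,
    # so that fast and slow ciphers become comparable.
    relative = cipher_average / ALL_AVERAGE
    worst = min(differences.values())
    spread = max(differences.values()) - worst
    return differences, worst / relative, spread / relative


differences, normalized_min, normalized_range = analyse(SERPENT_AVERAGE, SERPENT_MEASURED)
for cpu, diff in differences.items():
    print(f"{cpu:9s} {diff:+9.0f} KB/s")
print(f"normalized min   {normalized_min:9.0f}")
print(f"normalized range {normalized_range:9.0f}")
```

Serpent's worst case is the p4-3200, where it stays roughly 1,676 KB/s below the expected speed; dividing by its relative speed of 58.0% gives the normalized min of about -2,890 shown in the table.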

So obviously p3-1000 is the CPU most suited for cipher algorithms. It performs on average 113 KB/s faster than the others. However compared to the actual speed of 2-10 MB/s this speed-up is not substantial.

The cipher performing best relative to all CPUs is CAST5. It has the smallest drop in speed when run on all CPUs. Next are CAST6 and 3DES, which also show solid performance regardless of the CPU. Most fragile to CPU architecture is Serpent; it shows almost 1,676 KB/s less speed on the p4-3200 than expected.

Surprising is that 3DES shows the least fluctuation: the range of its speed differences is only 281 KB/s. On all CPUs 3DES performs almost exactly as expected by the average. However relative to 3DES's slow speed this range is not that small. The normalized ranges of CAST5, 3DES, Blowfish and Rijndael all show that these ciphers are quite independent of the CPU. Again Serpent shows the largest range of speed differences.

See the external table file for a detailed speed table listing for all CPUs.

5.5 Compiler and Optimization Flags

The last collection of test results is centered on the question "How important are the compiler and compiler flags for the encryption speed?". This question already arose above during the comparison of different distributions. There, the binary package maintainer, or in the case of Gentoo the distribution user, sets the (gcc) compiler flags used to compile the library source code.

To examine the compiler flags' influence, the cipher source code was compiled using all 35 different flag combinations shown in table "Compiler and Flags Tested". As stated above, the biggest problem was to verify that the build scripts (configure + make) of the library actually passed the flags on to the compiler.

To improve readability of the following plots, only a subset of all compiler flags is displayed. The longer gcc compiler flag sequences are shortened to allow compact display in the legend:

| Shortened | Flags |
|---|---|
| -O2 p4 | -O2 -march=pentium4 |
| -O3 p4 | -O3 -march=pentium4 |
| -O2 p4 ofp | -O2 -march=pentium4 -fomit-frame-pointer |
| -O3 p4 ofp | -O3 -march=pentium4 -fomit-frame-pointer |
| -O2 p4s ofp | -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer |
| -O3 p4s ofp | -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer |
| -O2 p4s ofp ul | -O2 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops |
| -O3 p4s ofp ul | -O3 -march=pentium4 -msse -msse2 -msse3 -mfpmath=sse -fomit-frame-pointer -funroll-loops |

Of the above flags, only the -O3 variants are included in the following plots.
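The shortened labels follow a simple pattern, which the following Python sketch makes explicit. The abbreviation map is read off the table above; the function name `expand` is only illustrative and not part of the test programs.

```python
# Abbreviations used in the plot legends, as listed in the table above.
ABBREVIATIONS = {
    "p4": ["-march=pentium4"],
    "p4s": ["-march=pentium4", "-msse", "-msse2", "-msse3", "-mfpmath=sse"],
    "ofp": ["-fomit-frame-pointer"],
    "ul": ["-funroll-loops"],
}


def expand(label):
    """Expand a shortened legend label into the full gcc flag string."""
    flags = []
    for token in label.split():
        # Tokens without an abbreviation (e.g. -O3) are passed through unchanged.
        flags.extend(ABBREVIATIONS.get(token, [token]))
    return " ".join(flags)


print(expand("-O3 p4s ofp ul"))
```

This prints the full flag sequence from the last table row.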

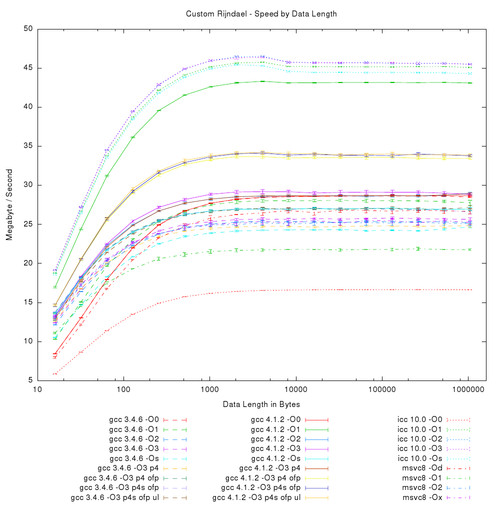

The first three plots compare compiler flags based on the three custom cipher implementations. First is the Rijndael implementation, which already shows the main trends of the compiler and flags comparison: Intel's C++ compiler generates the fastest code. Next best is gcc with the highest level of optimization. Microsoft Visual C++ lands somewhere in the middle of the field.

Another important observation is that the gcc 4.1.2 -O3 p4 ofp flag combination performs nearly the same as "-O3 p4s ofp" and "-O3 p4s ofp ul". This means that the flags -funroll-loops and -msse -msse2 -msse3 -mfpmath=sse do not change performance.

An outlier result is the one generated by gcc 4.1.2 -O1: it shows far faster performance than all other gcc results. The reason for this fast result is unknown: less optimization seems to do some ciphers (here Rijndael) good.

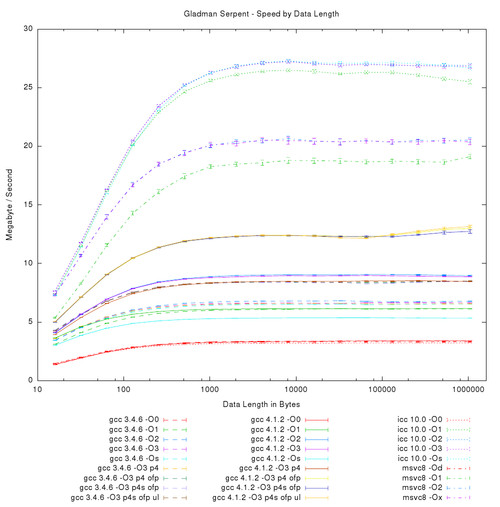

Second custom implementation is Gladman's Serpent code. Again Intel's C++ compiler wins the race by a long shot. This time the second place goes to Microsoft's Visual C++, which also shows a large winning margin against gcc.

Interesting here is that all three compilers perform nearly the same when optimization is disabled: the red lines are almost equal.

gcc again shows large performance gains from more compiler flags, peaking again with gcc 4.1.2 -O3 p4 ofp.

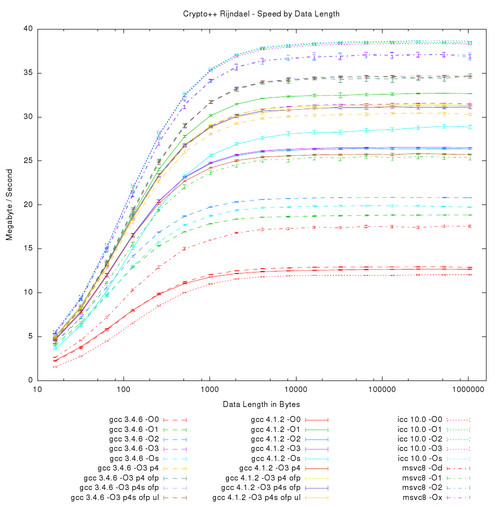

The custom cipher code extracted from Botan is an interesting candidate for optimization: it mainly consists of eight substitution box functions plus the transformation and support functions, all of which are declared static inline.

This leaves lots of room for optimizations like instruction scheduling, reordering and register allocation. However, the cipher code contains only a few branches and loops. Except for the loop over the 256-bit blocks, no branches are contained in the main execution part.

The plot shows again Intel's compiler to provide highest optimization. The second place goes this time to gcc, but only with the highest optimization flags level in the test. Third is Visual C++.

Remarkable is the large difference between the winning combinations, which are above 20 MB/s, and the middle field of gcc flag combinations: they all show speeds below 10 MB/s. The jump from 10 MB/s to more than 20 MB/s happens when -fomit-frame-pointer is added to the flags. This was also visible in the last two plots, but the jump is really large in the current plot.

Again gcc 4.1.2 -O1 shows a result breaking out of the middle field. This time it does not reach -O3 p4 ofp levels.

Now we study the results of cipher implementations in the Crypto++ library. First up is Rijndael.

The plot shows a much larger spread of results than the three custom implementations. Again the winning order is Intel's followed by Visual C++ and gcc. However the winning speed results are much closer together than in the last three tests.

gcc 4.1.2 -O1 again shows larger speed optimization than -O3 ofp combinations. But again the difference is smaller than before.

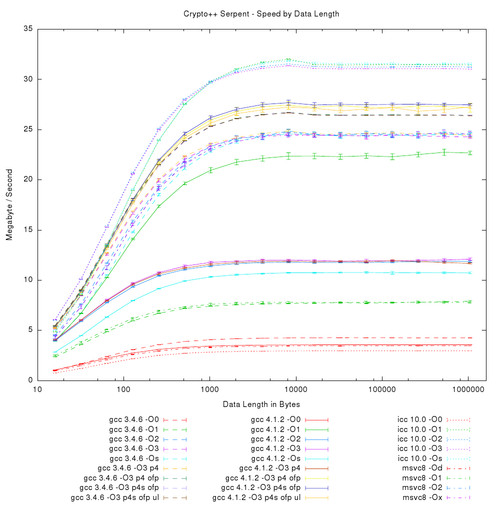

Crypto++'s implementation of Serpent shows very much the same results as MyBotan Serpent: icc best, gcc with -O3 ofp second and msvc third. Again gcc 4.1.2 -O1 shows a special performance.

This time gcc 3.4.6 also shows good speed results, nearly reaching gcc 4.1.2. In the preceding tests gcc 3.4.6 did not show good performance compared to the other results.

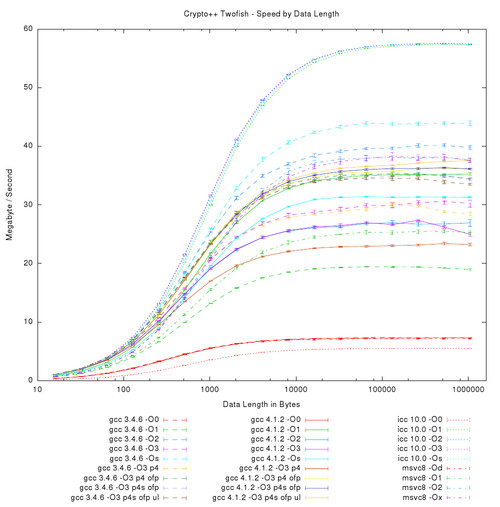

The plot above compares the compiler flags for the Twofish implementation in Crypto++. It shows the same findings as the previous plots.

The PDF plot file contains six more comparisons with different ciphers from Crypto++. All show the same observations as the first six and are therefore omitted here. Check the PDF or tarball for the other charts.

5.5.1 Sub-Conclusion

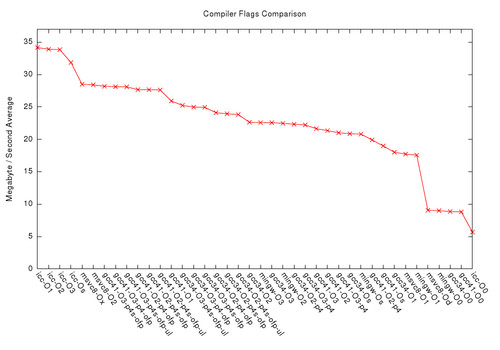

The central point of interest in this section is to find the fastest compiler / compiler flags combination for all ciphers. For this comparison the speeds of all ciphers are averaged for each compiler flags combination. The only other calculation of interest is to see how much slower the other compilers are. So each total average is also displayed relative to the fastest compiler / flags combination.

| | average | relative | my-rijndael | gladman-serpent | mybotan-serpent | cryptopp-rijndael | cryptopp-serpent | cryptopp-twofish | ... |
|---|---|---|---|---|---|---|---|---|---|
| icc-O1 | 34,977 | 100.00% | 46,864 | 27,123 | 30,029 | 39,456 | 32,779 | 58,783 | ... |
| icc-O2 | 34,713 | 99.25% | 47,630 | 27,905 | 30,195 | 39,638 | 32,291 | 58,961 | ... |
| icc-O3 | 34,653 | 99.07% | 47,534 | 27,873 | 30,196 | 39,283 | 32,112 | 58,979 | ... |
| icc-Os | 32,620 | 93.26% | 46,541 | 27,950 | 8,254 | 39,510 | 32,626 | 58,911 | ... |
| msvc8-Ox | 29,168 | 83.39% | 26,135 | 21,027 | 22,888 | 38,032 | 25,234 | 39,159 | ... |
| msvc8-O2 | 29,098 | 83.19% | 25,895 | 21,155 | 22,642 | 37,967 | 25,312 | 39,015 | ... |
| gcc41-O3-p4s-ofp | 28,863 | 82.52% | 34,955 | 13,040 | 26,198 | 31,906 | 28,338 | 37,214 | ... |
| gcc41-O3-p4-ofp | 28,790 | 82.31% | 34,493 | 13,290 | 26,846 | 32,154 | 28,071 | 37,091 | ... |
| gcc41-O3-p4s-ofp-ul | 28,770 | 82.25% | 35,025 | 13,457 | 26,690 | 32,065 | 27,919 | 38,452 | ... |
| gcc41-O2-p4-ofp | 28,327 | 80.99% | 33,855 | 12,723 | 26,763 | 31,633 | 27,958 | 37,086 | ... |
| gcc41-O2-p4s-ofp | 28,324 | 80.98% | 34,230 | 12,653 | 26,539 | 32,095 | 27,190 | 37,493 | ... |
| gcc41-O2-p4s-ofp-ul | 28,287 | 80.87% | 34,160 | 12,816 | 26,820 | 32,039 | 27,489 | 37,109 | ... |
| gcc41-O1 | 26,537 | 75.87% | 44,357 | 6,293 | 20,535 | 33,495 | 23,282 | 36,114 | ... |
| gcc34-O3-p4s-ofp-ul | 25,837 | 73.87% | 27,841 | 8,709 | 7,174 | 35,445 | 27,308 | 35,370 | ... |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

The table above shows only the first rows and columns of the complete table. See the external web page for the full speed table listing for all compiler flags.

Obviously Intel's C++ compiler is the fastest; it shows about the same performance for -O1, -O2 and -O3. When size optimization (-Os) is enabled, the speed drops by about 7%.

The second best compiler in the test is Microsoft's Visual C++ 8.0: the code it creates performs roughly 16.5% slower than that created by Intel's compiler. Again the maximum optimization flags /Ox and /O2 show about equal performance.

But close behind is gcc 4.1.2 with the flags combination -O3 p4s ofp, which creates 17.5% slower code than Intel's compiler. The older gcc version 3.4.6 is an amazing 26% slower than Intel's top mark.

However relative to gcc 4.1.2 the older compiler version 3.4.6 is only 10% slower. This is an interesting result, especially in view of early reports on gcc 4.x to show poorer optimization than the tried-and-true old version 3.4.x. This opinion was very popular for 4.0.x versions of gcc. At least in the cipher code case, this does not hold for 4.1.x.
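The percentages quoted above follow directly from the average column of the table. A small Python sketch (names are illustrative) makes the two different baselines explicit: Intel's best result on the one hand, gcc 4.1.2's best result on the other.

```python
# Average speed in KB/s over all ciphers, taken from the table above.
average = {
    "icc-O1": 34977,
    "msvc8-Ox": 29168,
    "gcc41-O3-p4s-ofp": 28863,
    "gcc34-O3-p4s-ofp-ul": 25837,
}


def percent_slower(candidate, baseline):
    """How many percent slower the candidate's code runs than the baseline's."""
    return 100 * (1 - average[candidate] / average[baseline])


for candidate in ("msvc8-Ox", "gcc41-O3-p4s-ofp", "gcc34-O3-p4s-ofp-ul"):
    print(f"{candidate:20s} {percent_slower(candidate, 'icc-O1'):5.1f}% slower than icc-O1")

# The same gcc 3.4.6 result, but measured against gcc 4.1.2 instead of icc.
gcc_gap = percent_slower("gcc34-O3-p4s-ofp-ul", "gcc41-O3-p4s-ofp")
print(f"gcc 3.4.6 vs. gcc 4.1.2: {gcc_gap:.1f}% slower")
```

Against Intel's compiler the gap of gcc 3.4.6 is about 26%, while against gcc 4.1.2 it shrinks to roughly 10%, because the baseline itself is slower.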

A nice graphical overview of compiler speed is shown below: all average speed results are plotted by compiler / compiler flags combinations. The average speed results are sorted to show a monotone decreasing speed line.

The obvious jumps in the speed line are the one from icc to the others, from 34 MB/s down to 28 MB/s, followed by a smaller jump between the gcc 4.1.2 and 3.4.6 results around 26 MB/s, and the last large jump down to less than 10 MB/s, which is due to test results without any optimization flags activated (-O0).

Another interesting observation is that many gcc flags have no effect on the cipher code generation. This is seen by the long steady intervals with minimum sloping.

6 Conclusion

In this section some of the results observed above are rediscussed to form a final conclusion.

In section Ciphers Compared each of the 15 compared ciphers is evaluated on Gentoo. The average speed across all libraries implementing a particular cipher is calculated. Blowfish turned out to be the fastest cipher in the test. However, selecting a cipher for a specific purpose must take more parameters into account than raw speed. More important is a cipher's strength as accepted by cryptography experts. Nevertheless the numbers are a concrete basis for cipher selection.

When regarding the selected cryptography libraries in section Libraries Compared by Cipher, large differences become visible. One would expect all libraries to contain about the same cipher implementations, as all calculation results have to be the same. However performance varies greatly, and the variation is not due to compiler flags or other external problems.

All OpenSSL's cipher implementations show high levels of optimizations, thus promising good performance for SSL sockets. Beecrypt implements only two ciphers, but these two implementations show very high speed: Beecrypt's Blowfish implementation reaches 52 MB/s, the highest speed result in the whole test. Tomcrypt provides the largest number of ciphers and consistently good performance on all of them. Botan and Crypto++ show similar speed results, each having some fast and some slower cipher implementations. The small Nettle is rather old and thus probably contains more out-dated, slower implementations.

The first real surprise of the speed comparison is the extremely slow test results measured on all ciphers implemented in libmcrypt and libgcrypt. libmcrypt's ciphers show an extremely long start-up overhead, but once it is amortized the cipher's throughput is equal to the other, faster libraries. libgcrypt's results on the other hand are really abysmal and trail far behind all other libraries. This does not bode well for GnuTLS's SSL socket's performance.

Next Findings On Different Distributions are discussed to put the previous speed results, which were all measured on Gentoo, into perspective. The result shows that Gentoo really does perform faster than the others, probably due to the high optimization flags selected during source compilation of the libraries. Gentoo is followed by the newer versions of Ubuntu (hardy) and Debian (lenny). Fedora and Ubuntu gutsy perform about equally. The oldest distribution Debian etch takes the last place, showing almost 15% slower speed results than Gentoo.

The section Ciphers compared by CPU was included to make sure that the results collected on the primary testing computer would be transferable onto other systems. This proved to be the case. Little difference other than the expected relative speed scaling was observable for other CPUs. Most importantly no cache effects or special speed-ups were detectable. Most robust cipher was CAST5 and the one most fragile to CPU architecture was Serpent.

Most interesting for other applications outside the scope of cipher algorithms was the Compiler and Optimization Flags comparison. It showed that Intel's C++ compiler produces by far the most optimized code for all ciphers tested. Second and third place go to Microsoft Visual C++ 8.0 and gcc 4.1.2, which generate code that is roughly 16.5% and 17.5% slower than that generated by Intel's compiler. gcc's performance is highly dependent on the number of optimization flags enabled: a simple -O3 is not sufficient to produce well optimized binary code.

Doesn't PKIF (pkif.sourceforge.net) have all/some of the algorithms you used? Why didn't you try that one? Is it using one of the same libraries or something?

I only mention it because it seems more notable than some of the libraries that you did test. It has EAL 4 certs..