Speed Test Results

Experiment

The speed test compares the libstdc++ STL red-black tree with the implemented B+ tree across many different parameters. The newer STL hash table container from the __gnu_cxx namespace is also tested against the two trees. To keep the focus on the algorithms and to reduce data copying, the multiset specializations were chosen. Note that the comparison between the hash table and the trees is somewhat unfair, because the hash table does not keep the keys sorted and thus cannot be used for all applications.

Three sets of test procedures are used. The first only inserts n random integers into the tree / hash table. The second first inserts n random integers, then performs n lookups for those integers and finally erases all n integers. The last test only performs n lookups on a tree pre-filled with n integers. All lookups are successful.
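The remark above about unsorted keys can be illustrated with a minimal sketch. It uses std::multiset and std::unordered_multiset from the C++11 standard library as stand-ins, which is an assumption: the speed test itself uses the libstdc++ containers named above. The function names are hypothetical. Only the tree can answer a range query from its key order; the hash table has to inspect every item.

```cpp
#include <cstddef>
#include <iterator>
#include <set>
#include <unordered_set>

// Count the keys in [lo, hi) using the sorted order of the tree:
// two O(log n) searches plus a walk over the matching range.
std::size_t count_range_tree(const std::multiset<int>& tree, int lo, int hi)
{
    if (lo >= hi) return 0;  // empty range, avoid a negative distance
    return static_cast<std::size_t>(
        std::distance(tree.lower_bound(lo), tree.lower_bound(hi)));
}

// The hash table has no key order, so the same query must visit every item.
std::size_t count_range_hash(const std::unordered_multiset<int>& table,
                             int lo, int hi)
{
    std::size_t count = 0;
    for (int key : table)
        if (lo <= key && key < hi) ++count;
    return count;
}
```

Both functions return the same count for the same data, but the hash table version always costs time proportional to the total number of stored items.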

These three test sequences are performed for n from 125 to 4,096,000, where n is doubled after each test run. For each n the test cycles are repeated until a total of 8,192,000 items have been inserted / looked up. This way the measured speed for small n is averaged over up to 65,536 sample runs.
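The run schedule described above can be reproduced with a few lines. This is only a sketch: the constants come from the text, but the helper make_schedule and the assumption that the repetition count is exactly the total divided by n are illustrative and not taken from the speed test source.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Values taken from the description of the experiment.
const std::size_t min_items = 125;
const std::size_t max_items = 4096000;
const std::size_t total_items = 8192000;

// Hypothetical helper: returns (n, repetitions) pairs, doubling n each step.
// Assumes each n is repeated total_items / n times.
std::vector<std::pair<std::size_t, std::size_t> > make_schedule()
{
    std::vector<std::pair<std::size_t, std::size_t> > schedule;
    for (std::size_t n = min_items; n <= max_items; n *= 2)
        schedule.push_back(std::make_pair(n, total_items / n));
    return schedule;
}
```

Under these assumptions the schedule has 16 entries, starting with 65,536 repetitions for n = 125 and ending with 2 repetitions for n = 4,096,000.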

Lastly, one purpose of the test is to determine a good node size for the B+ tree. Therefore the test runs are performed with different slot counts; both inner and leaf nodes hold the same number of items. The number of slots tested ranges from 4 to 256 and therefore yields node sizes from about 50 to 2,048 bytes. This requires that the B+ tree template is instantiated for each of the probed node sizes!
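Because the slot count is a compile-time parameter, probing several node sizes means instantiating the template once per size. The following sketch shows one way to do that with recursive templates. FakeTree and SlotRange are hypothetical names, FakeTree merely stands in for a tree with a slot-count template parameter, and the doubling step is chosen only to keep the example short; the real test may probe other slot counts.

```cpp
#include <vector>

// Hypothetical stand-in for a container whose slot count is a template
// parameter. A real B+ tree would size its nodes from this value.
template <int Slots>
struct FakeTree
{
    static int slot_count() { return Slots; }
};

// Instantiate the test for Low, 2*Low, ..., High at compile time.
// High must be reachable from Low by doubling, or the recursion never ends.
template <int Low, int High>
struct SlotRange
{
    static void run(std::vector<int>& tested)
    {
        tested.push_back(FakeTree<Low>::slot_count());  // a timed test would run here
        SlotRange<Low * 2, High>::run(tested);
    }
};

// End of the recursion: the largest slot count.
template <int High>
struct SlotRange<High, High>
{
    static void run(std::vector<int>& tested)
    {
        tested.push_back(FakeTree<High>::slot_count());
    }
};
```

Calling SlotRange<4, 256>::run(tested) instantiates seven distinct FakeTree types. Every additional instantiation adds to compile time and memory use, since the compiler must generate and optimize a full copy of the template for each slot count.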

The speed test source code was compiled with g++ 4.1.2 using the options -O3 -fomit-frame-pointer.

Attention: Compilation of the speed test with -O3 takes very long and requires much RAM. It is not automatically built when running "make all".

The results are displayed below using gnuplot. All tests were run on a Pentium4 3.2 GHz with 2 GB RAM. A high-resolution PDF plot of the following images can be found in the package at speedtest/speedtest.pdf.

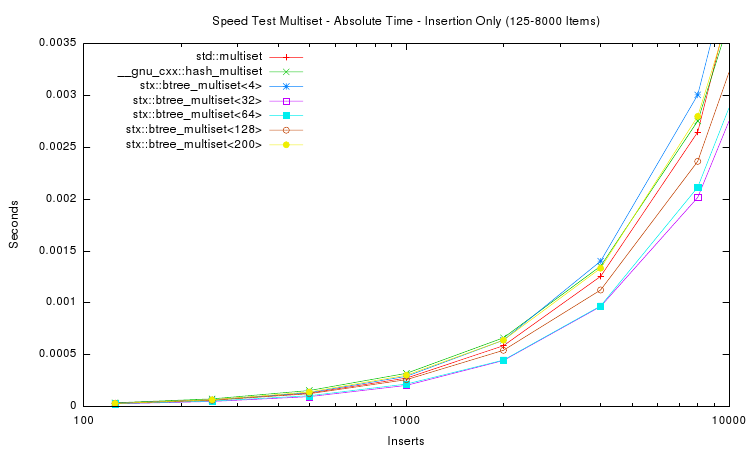

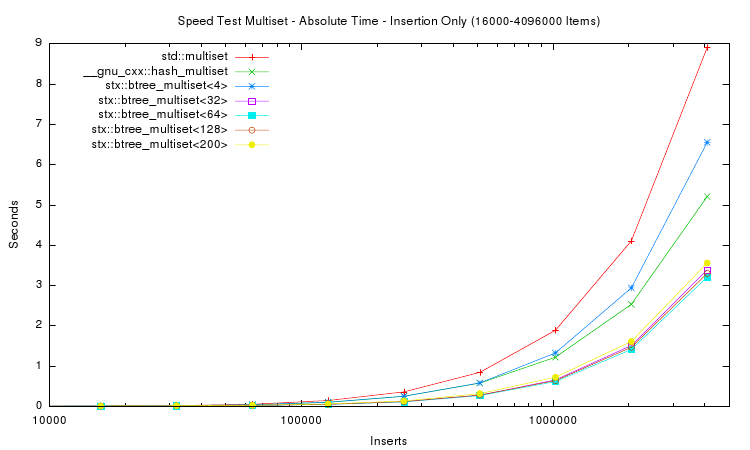

The first two plots above show the absolute time measured for inserting n items into seven different tree variants. For small n (the first plot) the speeds of the red-black tree and the B+ tree are very similar. For large n the red-black tree slows down, and for n > 1,024,000 items the red-black tree requires almost twice as much time as a B+ tree with 32 slots. The STL hash table performs better than the STL multiset but not as well as the B+ tree implementations with higher slot counts.

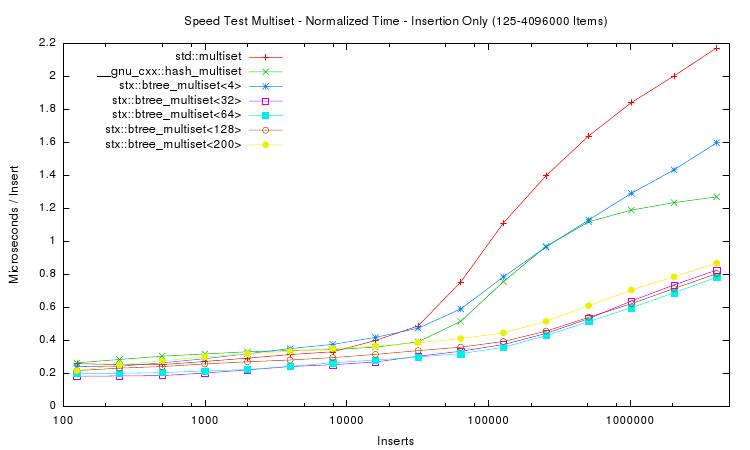

The next plot shows the insertion time per item, which is calculated by dividing the absolute time by the number of inserted items. Notice that insertion time is now in microseconds. The plot shows that the red-black tree reaches some limitation at about n = 16,000 items. Beyond this item count the B+ tree (with 32 slots) performs much better than the STL multiset. The STL hash table resizes itself in defined intervals, which leads to non-linearly increasing insert times.
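The per-item figure is a simple normalization of the measured total. A minimal sketch, assuming the total is available in seconds and using std::multiset with a fixed-seed std::mt19937 as stand-ins for the tested containers and the random input (both function names are hypothetical):

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <random>
#include <set>

// Convert a total time in seconds into microseconds per inserted item.
double microseconds_per_item(double total_seconds, std::size_t items)
{
    assert(items > 0);
    return total_seconds * 1e6 / static_cast<double>(items);
}

// Time the insertion of n pseudo-random integers into a std::multiset.
double time_insert_seconds(std::size_t n)
{
    std::mt19937 rng(12345);  // fixed seed so runs are repeatable
    std::multiset<int> tree;
    std::chrono::steady_clock::time_point start = std::chrono::steady_clock::now();
    for (std::size_t i = 0; i < n; ++i)
        tree.insert(static_cast<int>(rng() >> 1));  // shift keeps the value non-negative
    std::chrono::steady_clock::time_point stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}
```

For example, microseconds_per_item(time_insert_seconds(n), n) gives one point of such a per-item curve for a given n.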

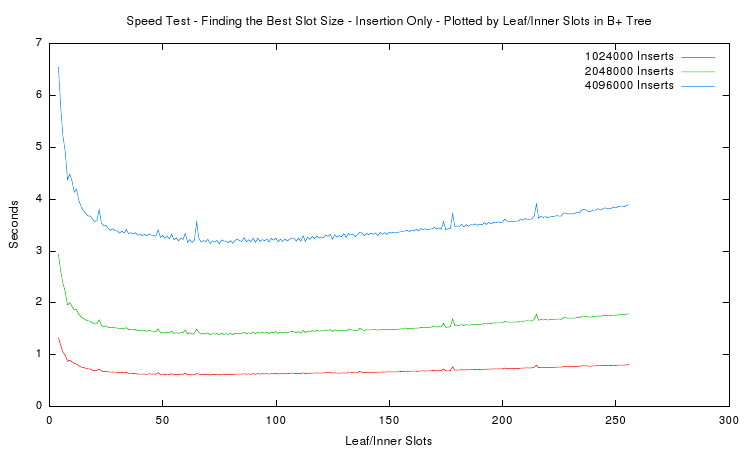

The goal of the last insertion plot is to find the best node size for the B+ tree. It displays the total measured time of the insertion test depending on the number of slots in inner and leaf nodes. Only runs with more than 1 million inserted items are plotted. One can see that the minimum is around 65 slots for each of the curves. However, to reduce unused memory in the nodes, the most practical slot count is around 35. This amounts to total node sizes of about 280 bytes. Thus a target size of 256 bytes was chosen in the implementation.
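The arithmetic behind these numbers can be written down as a small compile-time computation. This is a sketch under assumptions: the figure of 8 bytes per slot is only what 280 bytes for 35 slots implies, and the helper slots_for with its lower bound of 8 slots is hypothetical rather than the library's actual definition.

```cpp
#include <cstddef>

// Hypothetical helper: number of slots that fit into a node of
// target_bytes, with a lower bound so that large keys still get
// a usable number of slots per node.
constexpr std::size_t slots_for(std::size_t target_bytes,
                                std::size_t bytes_per_slot,
                                std::size_t min_slots = 8)
{
    return (target_bytes / bytes_per_slot > min_slots)
               ? target_bytes / bytes_per_slot
               : min_slots;
}

// 35 slots of 8 bytes each give the 280 bytes mentioned in the text.
static_assert(35 * 8 == 280, "35 slots of 8 bytes occupy 280 bytes");
// A 256-byte target holds 32 slots of that size.
static_assert(slots_for(256, 8) == 32, "256-byte target yields 32 slots");
```

Deriving the slot count from a byte target rather than fixing it keeps the node size roughly constant when the key type changes.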

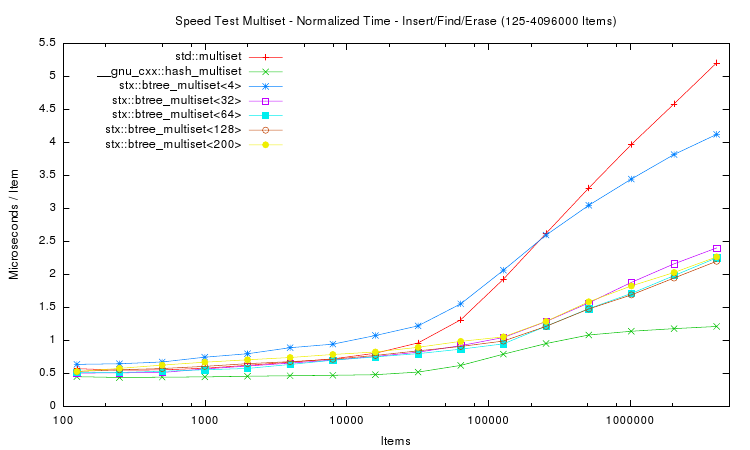

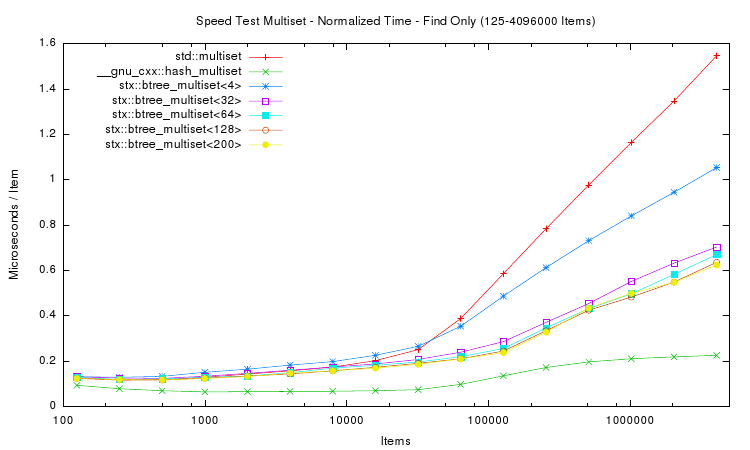

The two plots above show the same aspects as the insertion plots, but for the other two test procedures. The first plot measures a whole insert/find/delete cycle. The second plot is restricted to the lookup / find part.

The results for the trees are in general accordance with those of the insertion-only test. However, the hash table implementation performs much faster in both tests. This is expected, because hash table lookup (and deletion) requires fewer memory accesses than tree traversal. Thus a hash table implementation will generally be faster than a tree for these operations. But of course hash tables do not store items in sorted order. Interestingly, the hash table's performance does not scale linearly with the number of items: its peak performance is reached not with a small number of items, but with around 10,000 items. For item counts larger than 100,000 the hash table slows down: lookup time more than doubles. However, after doubling, the lookup time does not change much: lookup on tables with 1 million items takes approximately the same time as with 4 million items.
