Publishing STX B+ Tree 0.9 - Speed Gains over 0.8.6

Posted on 2013-05-07 21:16 by Timo Bingmann at Permlink with 0 Comments. Tags: #stx-btree

This blog post announces the new version 0.9 of my popular STX B+ Tree library of C++ templates, speedtests and demos. Since the last release in 2011, many patches and new ideas have accumulated. Here a summary of the most prominent changes:

- Changed the

find_lower()function, which is central to finding keys or insertion points to not use binary search for small node sizes. The reasoning behind this change is discussed in another blog post. - Added a

bulk_load()to allmapandsetvariants to construct a B+ tree from a pre-sorted iterator range. The sorted range is first copied into leaf nodes, over which then the B+ tree inner nodes are iteratively built. - The B+ tree template source code is now published under the Boost Software License! Use it!

- More minor changes are listed in the ChangeLog.

STX B+ tree version 0.9 is available from the usual project webpage.

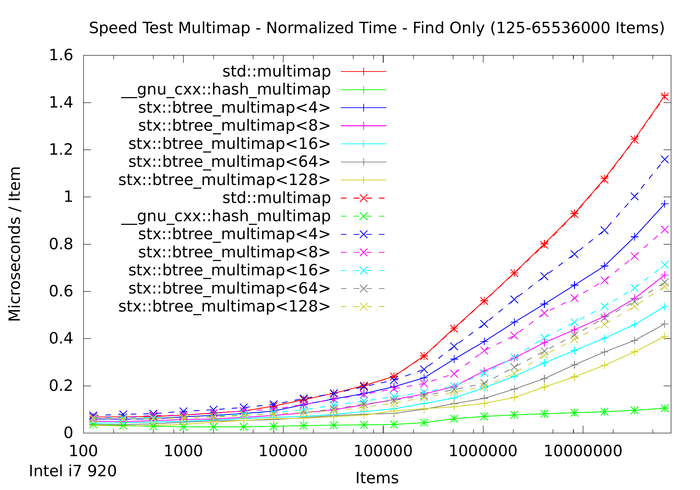

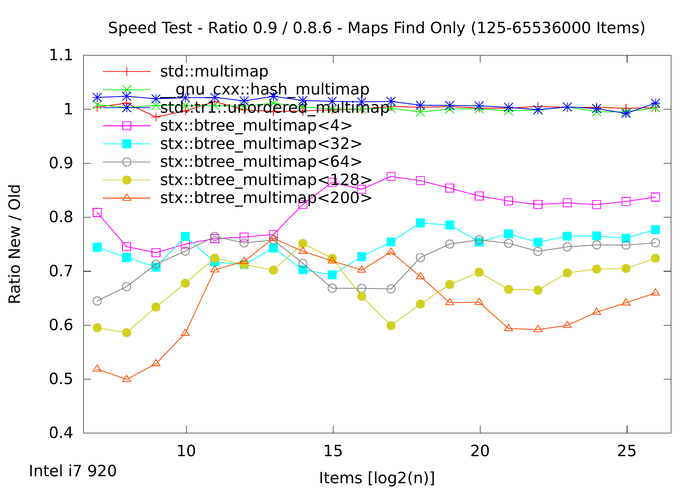

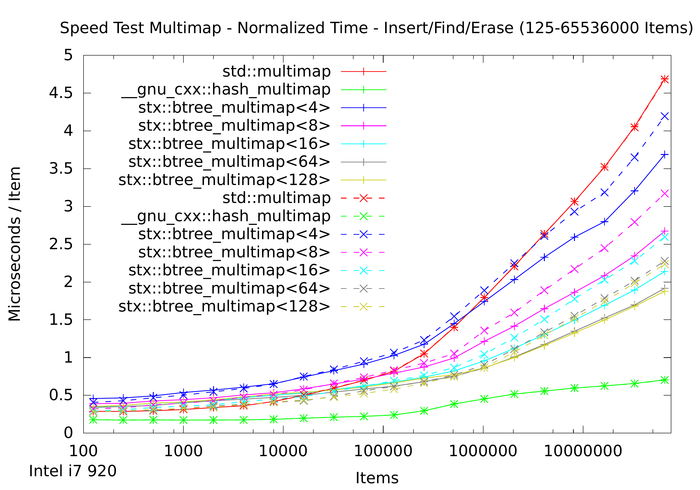

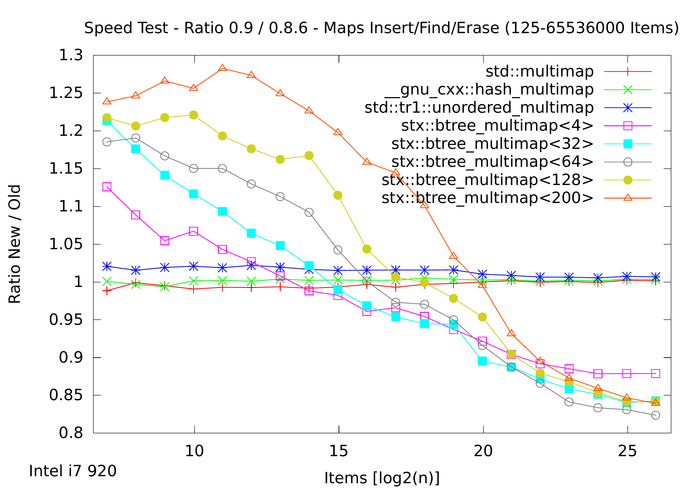

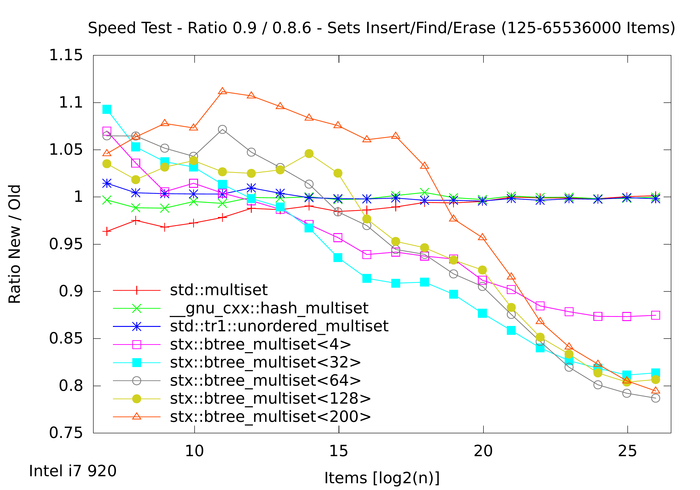

The switch from binary search to linear scan, and all further patches and optimization call for a direct comparison of version 0.8.6 and 0.9. Because of special optimizations to the btree_set specializations, the following plots differentiate between set and maps.

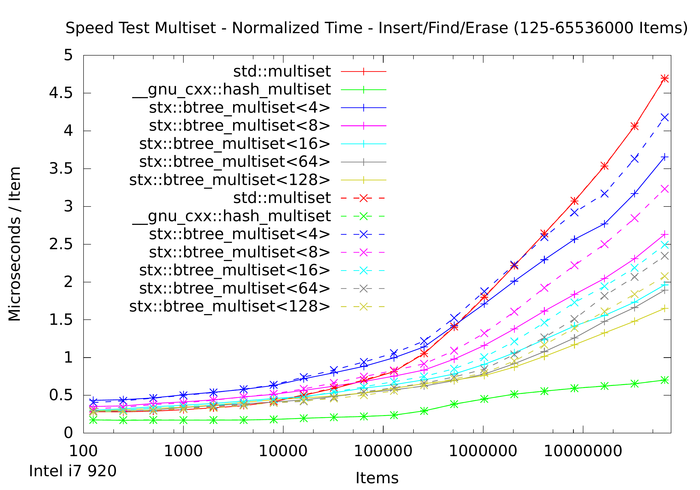

The full results PDF is downloadable by clicking one the plots, and is also included in the stx-btree tarball. The following plots show results from the integer pair speeds where dashed lines are from version 0.8.6 while solid lines are from 0.9. All speedtests where run on the Intel i7 920, which is described in more detail on the stx-btree speedtest page.

The largest difference between 0.8.6 and 0.9 can be seen in the find() only plot of the multimap container:

First notice that both the STL map and hash maps have identical results. However, the B+ tree implementation show a significant performance gain of about 20-30% from the optimizations introduced in 0.9: mainly the linear scanning of nodes!

The overall performance of an insertion/find/deletion cycle is also improved, however not as much as pure lookup speed. However, the picture is not only positive: the patches in version 0.9 actually have a speed decrease for small number of items, smaller than the CPU's cache size. This is due to calling std::copy when copying key/value items. Because the B+ tree is meant for large sets of items, and the absolute times for the small item sets is relatively low, this patch was still included. For other data types than integers, the call of std::copy will have a greater performance benefit than this ratio plot suggests!

The 0.9 release also includes some specific optimizations for the set specialization: a lot of superfluous copying is now omitted, which was due to sizeof(empty_struct) = 1, instead zero. Thus the overall performance improvement of the insertion/find/deletion cycle of the set variant is somewhat higher than that of map. This is specially true for the default node size of <64>, where 0.9 is about 20% faster than 0.8.6 in the largest tests.

Cheers.